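Notes on installing PAS 2.4 on AWS.

The descriptions and screen captures below show a "File Storage" VM, but the manifest does not create File Storage and uses S3 instead (so there is actually one VM fewer).

I changed this partway through writing the article, so a few inconsistencies remain; please ignore them.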

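Table of contents

- Installing the CLIs

- Creating the AWS environment with Terraform

- Configuring OpsManager

- Configuring BOSH Director

- Fixing the VM types

- Configuring PAS

- Deploying PAS

- Logging in to PAS

- Accessing Apps Manager

- Trying out PAS

- SSH to OpsManager

- Setting up the BOSH CLI

- Pausing PAS

- Resuming PAS

- Uninstalling BOSH Director and PAS

- Deleting the AWS environment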

Installing the CLIs

The steps below were verified with the following versions (you do not need exactly the same ones).

$ terraform --version

Terraform v0.11.11

+ provider.aws v1.60.0

+ provider.random v2.0.0

+ provider.template v1.0.0

+ provider.tls v1.2.0

$ om --version

0.50.0

$ jq --version

jq-1.6

$ pivnet --version

0.0.55

$ cf --version

cf version 6.43.0+815ea2f3d.2019-02-20

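Creating the AWS environment with Terraform

The latest release, v0.24.0, has several problems, so the master branch is used instead. The steps were verified at commit 9a47766dc276d489ff6bb23ae8357b874e7dd625 (check out that commit inside template after cloning if you want exactly the same state).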

mkdir install-pas

cd install-pas

git clone https://github.com/pivotal-cf/terraforming-aws.git template

Create terraform.tfvars.

Prepare an IAM user account that has the roles listed under Prerequisites.

The value for ops_manager_ami can be obtained from "Pivotal Cloud Foundry Ops Manager YAML for AWS" on Pivotal Network. Using the latest 2.4 image is recommended.

Creating terraform.tfvars

region="ap-northeast-1"

access_key="AKIA*********************"

secret_key="*************************"

availability_zones=["ap-northeast-1a", "ap-northeast-1c", "ap-northeast-1d"]

# <env_name>.<dns_suffix> becomes the base domain.

env_name="pas"

dns_suffix="bosh.tokyo"

# Deliberately not using RDS

rds_instance_count=0

ops_manager_instance_type="m4.large"

ops_manager_ami="ami-0e19bbc40aae251aa"

hosted_zone="bosh.tokyo"

# Either use Let's Encrypt,

# or generate a self-signed certificate with the script below

# https://raw.githubusercontent.com/aws-quickstart/quickstart-pivotal-cloudfoundry/master/scripts/gen_ssl_certs.sh

# ./gen_ssl_certs.sh example.com apps.example.com sys.example.com uaa.sys.example.com login.sys.example.com

ssl_cert=<<EOF

-----BEGIN CERTIFICATE-----

************************* (for Let's Encrypt, the contents of fullchain.pem)

-----END CERTIFICATE-----

EOF

ssl_private_key=<<EOF

-----BEGIN PRIVATE KEY-----

************************* (for Let's Encrypt, the contents of privkey.pem)

-----END PRIVATE KEY-----

EOF

# A trailing blank line is required

Set env_name and dns_suffix to match the domain name you intend to use. The OpsManager domain name will be pcf.<env_name>.<dns_suffix>. For PAS, the application domain name will be apps.<env_name>.<dns_suffix> and the system domain name will be sys.<env_name>.<dns_suffix>.

For example, to get the application domain apps.xyz.example.com, set env_name="xyz" and dns_suffix="example.com"; to get apps.foo.bar, set env_name="foo" and dns_suffix="bar".

If you obtain a TLS certificate, include *.<env_name>.<dns_suffix>, *.apps.<env_name>.<dns_suffix>, and *.sys.<env_name>.<dns_suffix> in it (and also *.uaa.sys.<env_name>.<dns_suffix> and *.login.sys.<env_name>.<dns_suffix> if you use Spring Cloud Services for PCF or Single Sign-On for PCF).
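To double-check the naming rules before requesting a certificate, a small sketch like the following may help. The function name pas_domains is hypothetical (it is not part of the Terraform templates); it only concatenates the two values in the way described above.

```shell
# Hypothetical helper: print the host names derived from env_name and dns_suffix.
pas_domains() {
  base="$1.$2"
  echo "opsman=pcf.${base}"
  echo "apps=apps.${base}"
  echo "sys=sys.${base}"
  # Wildcard names to include in the TLS certificate (without the optional
  # uaa/login entries needed for Spring Cloud Services or Single Sign-On).
  echo "cert=*.${base},*.apps.${base},*.sys.${base}"
}

pas_domains xyz example.com
```

With env_name="xyz" and dns_suffix="example.com", the apps line reads apps.xyz.example.com, which matches the example above.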

Make a few small changes to the provided Terraform templates.

Modifying the Terraform templates

# Add IAM settings for using Spot Instances

cat <<'EOD' > template/modules/ops_manager/spot-instance.tf

resource "aws_iam_policy" "spot_instance" {

name = "${var.env_name}_spot_instance"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": "ec2:RequestSpotInstances",

"Effect": "Allow",

"Resource": "*"

},

{

"Action": "ec2:DescribeSpotInstanceRequests",

"Effect": "Allow",

"Resource": "*"

},

{

"Action": "ec2:CancelSpotInstanceRequests",

"Effect": "Allow",

"Resource": "*"

},

{

"Action": "iam:CreateServiceLinkedRole",

"Effect": "Allow",

"Resource": "*"

}

]

}

EOF

}

resource "aws_iam_user_policy_attachment" "spot_instance" {

user = "${aws_iam_user.ops_manager.name}"

policy_arn = "${aws_iam_policy.spot_instance.arn}"

}

EOD

# Remove the TCP LB (to save cost)

sed -i.bk 's|locals {|/* locals {|' template/modules/pas/lbs.tf

echo '*/' >> template/modules/pas/lbs.tf

sed -i.bk 's|resource "aws_route53_record" "tcp" {|/* resource "aws_route53_record" "tcp" {|' template/modules/pas/dns.tf

echo '*/' >> template/modules/pas/dns.tf

sed -i.bk 's/${aws_lb_target_group.tcp.*.name}//g' template/modules/pas/outputs.tf

# Clean up the backup files left by sed

rm -f `find . -name '*.bk'`

Check the diff, just in case.

cd template

echo "======== Diff =========="

git diff | cat

echo "========================"

cd -

Run Terraform.

terraform init template/terraforming-pas

terraform plan -out plan template/terraforming-pas

terraform apply plan

In about 5 to 10 minutes, the AWS resources required to install PAS are created.

- VPC

- Subnet (public, infrastructure, ert, services x 3 AZ)

- Internet Gateway

- NAT Gateway

- RouteTable

- EC2(OpsManager)

- EIP(OpsManager)

- Network Loadbalancer(80, 443)

- Security Group

- Keypair

- IAM

- S3

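OpsManager is reachable at https://pcf.<env_name>.<dns_suffix>.

Everything is configured through the CLI, so do not operate it from the browser.

Configuring OpsManager

Perform the initial OpsManager setup with the om command.

Create the OpsManager administrator user. Change the username and password as appropriate.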

export OM_USERNAME=admin

export OM_PASSWORD=pasw0rd

export OM_DECRYPTION_PASSPHRASE=pasw0rd

export OM_TARGET=https://$(terraform output ops_manager_dns)

export OM_SKIP_SSL_VALIDATION=true

om configure-authentication \

--username ${OM_USERNAME} \

--password ${OM_PASSWORD} \

--decryption-passphrase ${OM_DECRYPTION_PASSPHRASE}
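Since every later om call relies on these variables, it can be worth confirming they are set before going further. The following is a minimal sketch; check_om_env is a hypothetical helper, not an om feature.

```shell
# Hypothetical pre-flight check: report any OM_* variable that is unset or empty.
check_om_env() {
  missing=""
  for name in OM_USERNAME OM_PASSWORD OM_DECRYPTION_PASSPHRASE OM_TARGET; do
    # Indirectly read the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing" >&2
    return 1
  fi
  return 0
}
```

Running check_om_env in a new terminal session, before any om command, returns non-zero and names the variables that still need to be exported.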

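The next step is optional, but if you have a real certificate, for example from Let's Encrypt, you can set it on OpsManager as well (a self-signed certificate is used by default).

# Run the following if you want to use the certificate set in terraform.tfvars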

terraform output ssl_cert > cert.pem

terraform output ssl_private_key > private.pem

om update-ssl-certificate \

--certificate-pem="$(cat cert.pem)" \

--private-key-pem="$(cat private.pem)"

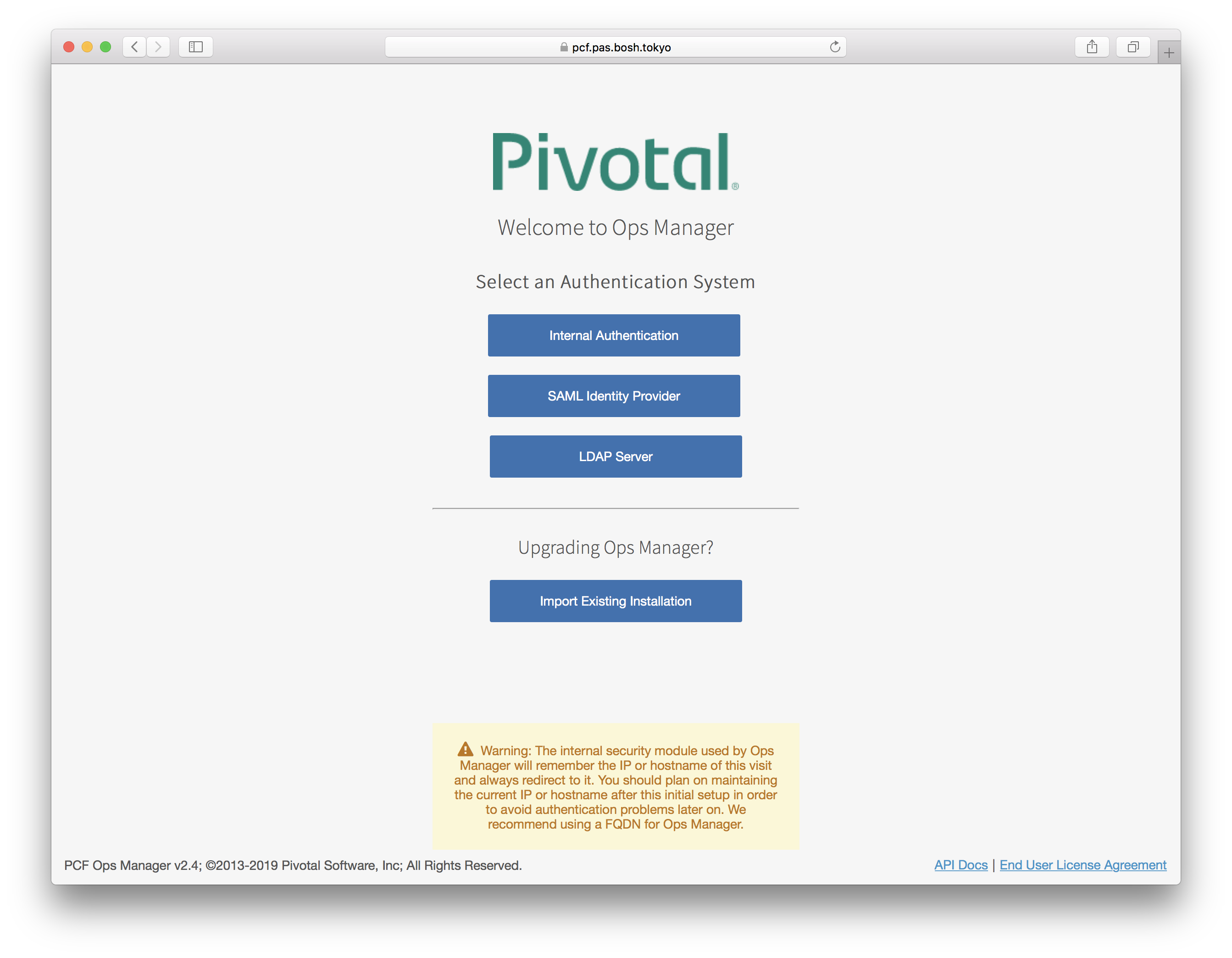

When you access OpsManager, the login screen is displayed.

You can log in as the administrator user.

At this point, the component diagram looks like this.

PlantUML (for reference)

@startuml

package "public" {

package "az1 (10.0.0.0/24)" {

node "Ops Manager"

rectangle "web-lb-1"

rectangle "ssh-lb-1"

boundary "NAT Gateway"

}

package "az2 (10.0.1.0/24)" {

rectangle "web-lb-2"

rectangle "ssh-lb-2"

}

package "az3 (10.0.2.0/24)" {

rectangle "web-lb-3"

rectangle "ssh-lb-3"

}

}

package "infrastructure" {

package "az2 (10.0.16.16/28)" {

}

package "az3 (10.0.16.32/28)" {

}

package "az1 (10.0.16.0/28)" {

}

}

package "deployment" {

package "az1 (10.0.4.0/24)" {

}

package "az2 (10.0.5.0/24)" {

}

package "az3 (10.0.6.0/24)" {

}

}

package "services" {

package "az3 (10.0.10.0/24)" {

}

package "az2 (10.0.9.0/24)" {

}

package "az1 (10.0.8.0/24)" {

}

}

boundary "Internet Gateway"

actor Operator #green

Operator -[#green]--> [Ops Manager]

public -up-> [Internet Gateway]

infrastructure -> [NAT Gateway]

deployment -up-> [NAT Gateway]

services -up-> [NAT Gateway]

@enduml

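Configuring BOSH Director

Configure the settings for installing BOSH Director, which handles installation, updates, health monitoring, and automatic recovery of PAS (Cloud Foundry).

The required information is contained in the Terraform output, so generate the parameter YAML (vars.yml) for configuring BOSH Director from it.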
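The script that follows repeats the same terraform output --state=... prefix on almost every line. As a sketch of how that could be shortened, a small wrapper is shown here; tf_out is a hypothetical name, and it assumes TF_DIR points at the directory containing terraform.tfstate, as in the script.

```shell
# Hypothetical wrapper around the repeated "terraform output --state=..." calls.
# TF_DIR is assumed to be the directory that contains terraform.tfstate.
tf_out() {
  terraform output --state="${TF_DIR}/terraform.tfstate" "$@"
}

# Examples (not executed here because they need a real state file):
#   REGION=$(tf_out region)
#   CIDR=$(tf_out -json infrastructure_subnet_cidrs | jq -r '.value[0]')
```

The exports in the script are left in their original long form so they can be copied as-is.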

Generating vars.yml

export TF_DIR=$(pwd)

mkdir director

cd director

export ACCESS_KEY_ID=$(terraform output --state=${TF_DIR}/terraform.tfstate ops_manager_iam_user_access_key)

export SECRET_ACCESS_KEY=$(terraform output --state=${TF_DIR}/terraform.tfstate ops_manager_iam_user_secret_key)

export SECURITY_GROUP=$(terraform output --state=${TF_DIR}/terraform.tfstate vms_security_group_id)

export KEY_PAIR_NAME=$(terraform output --state=${TF_DIR}/terraform.tfstate ops_manager_ssh_public_key_name)

export SSH_PRIVATE_KEY=$(terraform output --state=${TF_DIR}/terraform.tfstate ops_manager_ssh_private_key | sed 's/^/ /')

export REGION=$(terraform output --state=${TF_DIR}/terraform.tfstate region)

## Director

export OPS_MANAGER_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate ops_manager_bucket)

export OM_TRUSTED_CERTS=$(echo "$OM_TRUSTED_CERTS" | sed 's/^/ /')

## Networks

export AVAILABILITY_ZONES=$(terraform output --state=${TF_DIR}/terraform.tfstate -json azs | jq -r '.value | map({name: .})' | tr -d '\n' | tr -d '"')

export INFRASTRUCTURE_NETWORK_NAME=pas-infrastructure

export INFRASTRUCTURE_IAAS_IDENTIFIER_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_ids | jq -r '.value[0]')

export INFRASTRUCTURE_NETWORK_CIDR_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_cidrs | jq -r '.value[0]')

export INFRASTRUCTURE_RESERVED_IP_RANGES_0=$(echo $INFRASTRUCTURE_NETWORK_CIDR_0 | sed 's|0/28$|0|g')-$(echo $INFRASTRUCTURE_NETWORK_CIDR_0 | sed 's|0/28$|4|g')

export INFRASTRUCTURE_DNS_0=10.0.0.2

export INFRASTRUCTURE_GATEWAY_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_gateways | jq -r '.value[0]')

export INFRASTRUCTURE_AVAILABILITY_ZONES_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_availability_zones | jq -r '.value[0]')

export INFRASTRUCTURE_IAAS_IDENTIFIER_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_ids | jq -r '.value[1]')

export INFRASTRUCTURE_NETWORK_CIDR_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_cidrs | jq -r '.value[1]')

export INFRASTRUCTURE_RESERVED_IP_RANGES_1=$(echo $INFRASTRUCTURE_NETWORK_CIDR_1 | sed 's|16/28$|16|g')-$(echo $INFRASTRUCTURE_NETWORK_CIDR_1 | sed 's|16/28$|20|g')

export INFRASTRUCTURE_DNS_1=10.0.0.2

export INFRASTRUCTURE_GATEWAY_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_gateways | jq -r '.value[1]')

export INFRASTRUCTURE_AVAILABILITY_ZONES_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_availability_zones | jq -r '.value[1]')

export INFRASTRUCTURE_IAAS_IDENTIFIER_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_ids | jq -r '.value[2]')

export INFRASTRUCTURE_NETWORK_CIDR_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_cidrs | jq -r '.value[2]')

export INFRASTRUCTURE_RESERVED_IP_RANGES_2=$(echo $INFRASTRUCTURE_NETWORK_CIDR_2 | sed 's|32/28$|32|g')-$(echo $INFRASTRUCTURE_NETWORK_CIDR_2 | sed 's|32/28$|36|g')

export INFRASTRUCTURE_DNS_2=10.0.0.2

export INFRASTRUCTURE_GATEWAY_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_gateways | jq -r '.value[2]')

export INFRASTRUCTURE_AVAILABILITY_ZONES_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json infrastructure_subnet_availability_zones | jq -r '.value[2]')

export DEPLOYMENT_NETWORK_NAME=pas-deployment

export DEPLOYMENT_IAAS_IDENTIFIER_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_ids | jq -r '.value[0]')

export DEPLOYMENT_NETWORK_CIDR_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_cidrs | jq -r '.value[0]')

export DEPLOYMENT_RESERVED_IP_RANGES_0=$(echo $DEPLOYMENT_NETWORK_CIDR_0 | sed 's|0/24$|0|g')-$(echo $DEPLOYMENT_NETWORK_CIDR_0 | sed 's|0/24$|4|g')

export DEPLOYMENT_DNS_0=10.0.0.2

export DEPLOYMENT_GATEWAY_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_gateways | jq -r '.value[0]')

export DEPLOYMENT_AVAILABILITY_ZONES_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_availability_zones | jq -r '.value[0]')

export DEPLOYMENT_IAAS_IDENTIFIER_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_ids | jq -r '.value[1]')

export DEPLOYMENT_NETWORK_CIDR_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_cidrs | jq -r '.value[1]')

export DEPLOYMENT_RESERVED_IP_RANGES_1=$(echo $DEPLOYMENT_NETWORK_CIDR_1 | sed 's|0/24$|0|g')-$(echo $DEPLOYMENT_NETWORK_CIDR_1 | sed 's|0/24$|4|g')

export DEPLOYMENT_DNS_1=10.0.0.2

export DEPLOYMENT_GATEWAY_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_gateways | jq -r '.value[1]')

export DEPLOYMENT_AVAILABILITY_ZONES_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_availability_zones | jq -r '.value[1]')

export DEPLOYMENT_IAAS_IDENTIFIER_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_ids | jq -r '.value[2]')

export DEPLOYMENT_NETWORK_CIDR_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_cidrs | jq -r '.value[2]')

export DEPLOYMENT_RESERVED_IP_RANGES_2=$(echo $DEPLOYMENT_NETWORK_CIDR_2 | sed 's|0/24$|0|g')-$(echo $DEPLOYMENT_NETWORK_CIDR_2 | sed 's|0/24$|4|g')

export DEPLOYMENT_DNS_2=10.0.0.2

export DEPLOYMENT_GATEWAY_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_gateways | jq -r '.value[2]')

export DEPLOYMENT_AVAILABILITY_ZONES_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json pas_subnet_availability_zones | jq -r '.value[2]')

export SERVICES_NETWORK_NAME=pas-services

export SERVICES_IAAS_IDENTIFIER_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_ids | jq -r '.value[0]')

export SERVICES_NETWORK_CIDR_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_cidrs | jq -r '.value[0]')

export SERVICES_RESERVED_IP_RANGES_0=$(echo $SERVICES_NETWORK_CIDR_0 | sed 's|0/24$|0|g')-$(echo $SERVICES_NETWORK_CIDR_0 | sed 's|0/24$|3|g')

export SERVICES_DNS_0=10.0.0.2

export SERVICES_GATEWAY_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_gateways | jq -r '.value[0]')

export SERVICES_AVAILABILITY_ZONES_0=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_availability_zones | jq -r '.value[0]')

export SERVICES_IAAS_IDENTIFIER_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_ids | jq -r '.value[1]')

export SERVICES_NETWORK_CIDR_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_cidrs | jq -r '.value[1]')

export SERVICES_RESERVED_IP_RANGES_1=$(echo $SERVICES_NETWORK_CIDR_1 | sed 's|0/24$|0|g')-$(echo $SERVICES_NETWORK_CIDR_1 | sed 's|0/24$|3|g')

export SERVICES_DNS_1=10.0.0.2

export SERVICES_GATEWAY_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_gateways | jq -r '.value[1]')

export SERVICES_AVAILABILITY_ZONES_1=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_availability_zones | jq -r '.value[1]')

export SERVICES_IAAS_IDENTIFIER_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_ids | jq -r '.value[2]')

export SERVICES_NETWORK_CIDR_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_cidrs | jq -r '.value[2]')

export SERVICES_RESERVED_IP_RANGES_2=$(echo $SERVICES_NETWORK_CIDR_2 | sed 's|0/24$|0|g')-$(echo $SERVICES_NETWORK_CIDR_2 | sed 's|0/24$|3|g')

export SERVICES_DNS_2=10.0.0.2

export SERVICES_GATEWAY_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_gateways | jq -r '.value[2]')

export SERVICES_AVAILABILITY_ZONES_2=$(terraform output --state=${TF_DIR}/terraform.tfstate -json services_subnet_availability_zones | jq -r '.value[2]')

## vm extensions

export WEB_LB_SECURITY_GROUP=$(cat $TF_DIR/terraform.tfstate | jq -r '.modules[] | select(.resources["aws_security_group.web_lb"] != null).resources["aws_security_group.web_lb"].primary.attributes.name')

export SSH_LB_SECURITY_GROUP=$(cat $TF_DIR/terraform.tfstate | jq -r '.modules[] | select(.resources["aws_security_group.ssh_lb"] != null).resources["aws_security_group.ssh_lb"].primary.attributes.name')

export TCP_LB_SECURITY_GROUP=$(cat $TF_DIR/terraform.tfstate | jq -r '.modules[] | select(.resources["aws_security_group.tcp_lb"] != null).resources["aws_security_group.tcp_lb"].primary.attributes.name')

export VMS_SECURITY_GROUP=$(cat $TF_DIR/terraform.tfstate | jq -r '.modules[] | select(.resources["aws_security_group.vms_security_group"] != null).resources["aws_security_group.vms_security_group"].primary.attributes.name')

export WEB_TARGET_GROUPS="[$(terraform output --state=${TF_DIR}/terraform.tfstate web_target_groups | tr -d '\n')]"

export SSH_TARGET_GROUPS="[$(terraform output --state=${TF_DIR}/terraform.tfstate ssh_target_groups | tr -d '\n')]"

export TCP_TARGET_GROUPS="[$(terraform output --state=${TF_DIR}/terraform.tfstate tcp_target_groups | tr -d '\n')]"

cat <<EOF > vars.yml

access_key_id: ${ACCESS_KEY_ID}

secret_access_key: ${SECRET_ACCESS_KEY}

security_group: ${SECURITY_GROUP}

key_pair_name: ${KEY_PAIR_NAME}

ssh_private_key: |

${SSH_PRIVATE_KEY}

region: ${REGION}

ops_manager_bucket: ${OPS_MANAGER_BUCKET}

om_trusted_certs: ${OM_TRUSTED_CERTS}

# infrastructure

infrastructure_network_name: ${INFRASTRUCTURE_NETWORK_NAME}

infrastructure_iaas_identifier_0: ${INFRASTRUCTURE_IAAS_IDENTIFIER_0}

infrastructure_network_cidr_0: ${INFRASTRUCTURE_NETWORK_CIDR_0}

infrastructure_reserved_ip_ranges_0: ${INFRASTRUCTURE_RESERVED_IP_RANGES_0}

infrastructure_dns_0: ${INFRASTRUCTURE_DNS_0}

infrastructure_gateway_0: ${INFRASTRUCTURE_GATEWAY_0}

infrastructure_availability_zones_0: ${INFRASTRUCTURE_AVAILABILITY_ZONES_0}

infrastructure_iaas_identifier_1: ${INFRASTRUCTURE_IAAS_IDENTIFIER_1}

infrastructure_network_cidr_1: ${INFRASTRUCTURE_NETWORK_CIDR_1}

infrastructure_reserved_ip_ranges_1: ${INFRASTRUCTURE_RESERVED_IP_RANGES_1}

infrastructure_dns_1: ${INFRASTRUCTURE_DNS_1}

infrastructure_gateway_1: ${INFRASTRUCTURE_GATEWAY_1}

infrastructure_availability_zones_1: ${INFRASTRUCTURE_AVAILABILITY_ZONES_1}

infrastructure_iaas_identifier_2: ${INFRASTRUCTURE_IAAS_IDENTIFIER_2}

infrastructure_network_cidr_2: ${INFRASTRUCTURE_NETWORK_CIDR_2}

infrastructure_reserved_ip_ranges_2: ${INFRASTRUCTURE_RESERVED_IP_RANGES_2}

infrastructure_dns_2: ${INFRASTRUCTURE_DNS_2}

infrastructure_gateway_2: ${INFRASTRUCTURE_GATEWAY_2}

infrastructure_availability_zones_2: ${INFRASTRUCTURE_AVAILABILITY_ZONES_2}

# deployment

deployment_network_name: ${DEPLOYMENT_NETWORK_NAME}

deployment_iaas_identifier_0: ${DEPLOYMENT_IAAS_IDENTIFIER_0}

deployment_network_cidr_0: ${DEPLOYMENT_NETWORK_CIDR_0}

deployment_reserved_ip_ranges_0: ${DEPLOYMENT_RESERVED_IP_RANGES_0}

deployment_dns_0: ${DEPLOYMENT_DNS_0}

deployment_gateway_0: ${DEPLOYMENT_GATEWAY_0}

deployment_availability_zones_0: ${DEPLOYMENT_AVAILABILITY_ZONES_0}

deployment_iaas_identifier_1: ${DEPLOYMENT_IAAS_IDENTIFIER_1}

deployment_network_cidr_1: ${DEPLOYMENT_NETWORK_CIDR_1}

deployment_reserved_ip_ranges_1: ${DEPLOYMENT_RESERVED_IP_RANGES_1}

deployment_dns_1: ${DEPLOYMENT_DNS_1}

deployment_gateway_1: ${DEPLOYMENT_GATEWAY_1}

deployment_availability_zones_1: ${DEPLOYMENT_AVAILABILITY_ZONES_1}

deployment_iaas_identifier_2: ${DEPLOYMENT_IAAS_IDENTIFIER_2}

deployment_network_cidr_2: ${DEPLOYMENT_NETWORK_CIDR_2}

deployment_reserved_ip_ranges_2: ${DEPLOYMENT_RESERVED_IP_RANGES_2}

deployment_dns_2: ${DEPLOYMENT_DNS_2}

deployment_gateway_2: ${DEPLOYMENT_GATEWAY_2}

deployment_availability_zones_2: ${DEPLOYMENT_AVAILABILITY_ZONES_2}

# services

services_network_name: ${SERVICES_NETWORK_NAME}

services_iaas_identifier_0: ${SERVICES_IAAS_IDENTIFIER_0}

services_network_cidr_0: ${SERVICES_NETWORK_CIDR_0}

services_reserved_ip_ranges_0: ${SERVICES_RESERVED_IP_RANGES_0}

services_dns_0: ${SERVICES_DNS_0}

services_gateway_0: ${SERVICES_GATEWAY_0}

services_availability_zones_0: ${SERVICES_AVAILABILITY_ZONES_0}

services_iaas_identifier_1: ${SERVICES_IAAS_IDENTIFIER_1}

services_network_cidr_1: ${SERVICES_NETWORK_CIDR_1}

services_reserved_ip_ranges_1: ${SERVICES_RESERVED_IP_RANGES_1}

services_dns_1: ${SERVICES_DNS_1}

services_gateway_1: ${SERVICES_GATEWAY_1}

services_availability_zones_1: ${SERVICES_AVAILABILITY_ZONES_1}

services_iaas_identifier_2: ${SERVICES_IAAS_IDENTIFIER_2}

services_network_cidr_2: ${SERVICES_NETWORK_CIDR_2}

services_reserved_ip_ranges_2: ${SERVICES_RESERVED_IP_RANGES_2}

services_dns_2: ${SERVICES_DNS_2}

services_gateway_2: ${SERVICES_GATEWAY_2}

services_availability_zones_2: ${SERVICES_AVAILABILITY_ZONES_2}

# vm extensions

web_lb_security_group: ${WEB_LB_SECURITY_GROUP}

ssh_lb_security_group: ${SSH_LB_SECURITY_GROUP}

tcp_lb_security_group: ${TCP_LB_SECURITY_GROUP}

vms_security_group: ${VMS_SECURITY_GROUP}

web_target_groups: ${WEB_TARGET_GROUPS}

ssh_target_groups: ${SSH_TARGET_GROUPS}

tcp_target_groups: ${TCP_TARGET_GROUPS}

EOF
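The reserved IP ranges above are built with a separate sed pattern per subnet (0/28, 16/28, 32/28, 0/24), which only works for the CIDRs this template happens to produce. A more general sketch, assuming the range always starts at the subnet's network address and that the last octet does not overflow past 255, could look like this (reserved_range is a hypothetical helper):

```shell
# Hypothetical helper: given a CIDR and a count N, print "<network>-<network+N>",
# e.g. the first addresses of the subnet that BOSH should not use.
reserved_range() {
  cidr="$1"
  count="$2"
  addr="${cidr%/*}"     # strip the /prefix length, e.g. 10.0.16.16
  prefix="${addr%.*}"   # first three octets, e.g. 10.0.16
  last="${addr##*.}"    # last octet, e.g. 16
  echo "${addr}-${prefix}.$((last + count))"
}

reserved_range 10.0.16.16/28 4   # prints 10.0.16.16-10.0.16.20
```

For the infrastructure and deployment subnets the script above corresponds to a count of 4, and for the services subnets to a count of 3.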

Next, create the BOSH Director configuration file (config.yml).

Generating config.yml

cat <<EOF > config.yml

# Availability Zone definitions
az-configuration:
- name: ((infrastructure_availability_zones_0))
- name: ((infrastructure_availability_zones_1))
- name: ((infrastructure_availability_zones_2))
# Network definitions
networks-configuration:
  icmp_checks_enabled: false
  networks:
  # Network where BOSH Director is installed
  - name: ((infrastructure_network_name))
    subnets:
    - iaas_identifier: ((infrastructure_iaas_identifier_0))
      cidr: ((infrastructure_network_cidr_0))
      dns: ((infrastructure_dns_0))
      gateway: ((infrastructure_gateway_0))
      reserved_ip_ranges: ((infrastructure_reserved_ip_ranges_0))
      availability_zone_names:
      - ((infrastructure_availability_zones_0))
    - iaas_identifier: ((infrastructure_iaas_identifier_1))
      cidr: ((infrastructure_network_cidr_1))
      dns: ((infrastructure_dns_1))
      gateway: ((infrastructure_gateway_1))
      reserved_ip_ranges: ((infrastructure_reserved_ip_ranges_1))
      availability_zone_names:
      - ((infrastructure_availability_zones_1))
    - iaas_identifier: ((infrastructure_iaas_identifier_2))
      cidr: ((infrastructure_network_cidr_2))
      dns: ((infrastructure_dns_2))
      gateway: ((infrastructure_gateway_2))
      reserved_ip_ranges: ((infrastructure_reserved_ip_ranges_2))
      availability_zone_names:
      - ((infrastructure_availability_zones_2))
  # Network where the main products, including PAS, are installed
  - name: ((deployment_network_name))
    subnets:
    - iaas_identifier: ((deployment_iaas_identifier_0))
      cidr: ((deployment_network_cidr_0))
      dns: ((deployment_dns_0))
      gateway: ((deployment_gateway_0))
      reserved_ip_ranges: ((deployment_reserved_ip_ranges_0))
      availability_zone_names:
      - ((deployment_availability_zones_0))
    - iaas_identifier: ((deployment_iaas_identifier_1))
      cidr: ((deployment_network_cidr_1))
      dns: ((deployment_dns_1))
      gateway: ((deployment_gateway_1))
      reserved_ip_ranges: ((deployment_reserved_ip_ranges_1))
      availability_zone_names:
      - ((deployment_availability_zones_1))
    - iaas_identifier: ((deployment_iaas_identifier_2))
      cidr: ((deployment_network_cidr_2))
      dns: ((deployment_dns_2))
      gateway: ((deployment_gateway_2))
      reserved_ip_ranges: ((deployment_reserved_ip_ranges_2))
      availability_zone_names:
      - ((deployment_availability_zones_2))
  # Network where on-demand services (MySQL, PCC, etc.) are installed
  - name: ((services_network_name))
    subnets:
    - iaas_identifier: ((services_iaas_identifier_0))
      cidr: ((services_network_cidr_0))
      dns: ((services_dns_0))
      gateway: ((services_gateway_0))
      reserved_ip_ranges: ((services_reserved_ip_ranges_0))
      availability_zone_names:
      - ((services_availability_zones_0))
    - iaas_identifier: ((services_iaas_identifier_1))
      cidr: ((services_network_cidr_1))
      dns: ((services_dns_1))
      gateway: ((services_gateway_1))
      reserved_ip_ranges: ((services_reserved_ip_ranges_1))
      availability_zone_names:
      - ((services_availability_zones_1))
    - iaas_identifier: ((services_iaas_identifier_2))
      cidr: ((services_network_cidr_2))
      dns: ((services_dns_2))
      gateway: ((services_gateway_2))
      reserved_ip_ranges: ((services_reserved_ip_ranges_2))
      availability_zone_names:
      - ((services_availability_zones_2))
# Which network BOSH Director is placed on
network-assignment:
  network:
    name: ((infrastructure_network_name))
  other_availability_zones: []
  # singleton_availability_zone is the AZ for components that cannot scale out.
  # BOSH Director cannot scale out, so this setting decides where it is placed.
  singleton_availability_zone:
    name: ((infrastructure_availability_zones_0))

# Detailed settings for the target software (here, BOSH Director)
properties-configuration:
  director_configuration:
    allow_legacy_agents: true
    bosh_recreate_on_next_deploy: false
    bosh_recreate_persistent_disks_on_next_deploy: false
    database_type: internal
    director_worker_count: 5
    encryption:
      keys: []
      providers: []
    hm_emailer_options:
      enabled: false
    hm_pager_duty_options:
      enabled: false
    identification_tags: {}
    keep_unreachable_vms: false
    ntp_servers_string: 0.amazon.pool.ntp.org, 1.amazon.pool.ntp.org, 2.amazon.pool.ntp.org, 3.amazon.pool.ntp.org
    post_deploy_enabled: true
    resurrector_enabled: true
    retry_bosh_deploys: false
    # Use S3 as the BOSH Director blobstore
    s3_blobstore_options:
      endpoint: https://s3-((region)).amazonaws.com
      bucket_name: ((ops_manager_bucket))
      access_key: ((access_key_id))
      secret_key: ((secret_access_key))
      signature_version: "4"
      region: ((region))
  iaas_configuration:
    access_key_id: ((access_key_id))
    secret_access_key: ((secret_access_key))
    security_group: ((security_group))
    key_pair_name: ((key_pair_name))
    ssh_private_key: ((ssh_private_key))
    region: ((region))
  dns_configuration:
    excluded_recursors: []
    handlers: []
  security_configuration:
    generate_vm_passwords: true
    opsmanager_root_ca_trusted_certs: true
    trusted_certificates: ((om_trusted_certs))
  syslog_configuration:
    enabled: false
resource-configuration:
  compilation:
    instances: automatic
    instance_type:
      id: automatic
    internet_connected: false
  director:
    instances: automatic
    persistent_disk:
      size_mb: automatic
    instance_type:
      id: automatic
    internet_connected: false

# IaaS-specific extension settings that can be applied per VM
# See the following document for the available properties
# https://bosh.io/docs/aws-cpi/#resource-pools
vmextensions-configuration:
# Spot Instance bid price per VM type
- name: spot-instance-m4-large
  cloud_properties:
    spot_bid_price: 0.0340
    spot_ondemand_fallback: true
- name: spot-instance-m4-xlarge
  cloud_properties:
    spot_bid_price: 0.0670
    spot_ondemand_fallback: true
- name: spot-instance-t2-micro
  cloud_properties:
    spot_bid_price: 0.0050
    spot_ondemand_fallback: true
- name: spot-instance-t2-small
  cloud_properties:
    spot_bid_price: 0.0095
    spot_ondemand_fallback: true
- name: spot-instance-t2-medium
  cloud_properties:
    spot_bid_price: 0.0190
    spot_ondemand_fallback: true
- name: spot-instance-r4-xlarge
  cloud_properties:
    spot_bid_price: 0.0700
    spot_ondemand_fallback: true
- name: pas-web-lb
  cloud_properties:
    # Connects the GoRouter to the NLB
    lb_target_groups: ((web_target_groups))
    # With an NLB, the security group must be set on the instance rather than on the LB
    security_groups:
    - ((web_lb_security_group))
    - ((vms_security_group))
- name: pas-ssh-lb
  cloud_properties:
    # Connects the SSH Proxy to the NLB
    lb_target_groups: ((ssh_target_groups))
    # With an NLB, the security group must be set on the instance rather than on the LB
    security_groups:
    - ((ssh_lb_security_group))
    - ((vms_security_group))
- name: pas-tcp-lb
  cloud_properties:
    # Connects the TCP Router to the NLB
    lb_target_groups: ((tcp_target_groups))
    # With an NLB, the security group must be set on the instance rather than on the LB
    security_groups:
    - ((tcp_lb_security_group))
    - ((vms_security_group))

EOF
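config.yml refers to many ((placeholder)) names, and a typo in either file only surfaces when om is run. As a rough sanity check, the following sketch lists placeholders that have no matching top-level key in the vars file. missing_vars is a hypothetical helper and relies on grep -o, which GNU and BSD grep both provide.

```shell
# Hypothetical check: print every ((placeholder)) used in the config file that
# has no "name:" line in the vars file. Prints nothing when all are defined.
missing_vars() {
  config="$1"
  vars="$2"
  # In a basic regular expression, unescaped parentheses match literally.
  grep -o '(([A-Za-z0-9_-]*))' "$config" | tr -d '()' | sort -u |
  while read -r name; do
    grep -q "^${name}:" "$vars" || echo "$name"
  done
}

# Example (run inside the director directory once both files exist):
#   missing_vars config.yml vars.yml
```

An empty result suggests that every placeholder has a value; any printed name is one to add to vars.yml.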

Configure BOSH Director using config.yml and vars.yml.

cd ..

om configure-director \

--config director/config.yml \

--vars-file director/vars.yml

Output

started configuring director options for bosh tile

finished configuring director options for bosh tile

started configuring availability zone options for bosh tile

finished configuring availability zone options for bosh tile

started configuring network options for bosh tile

finished configuring network options for bosh tile

started configuring network assignment options for bosh tile

finished configuring network assignment options for bosh tile

started configuring resource options for bosh tile

applying resource configuration for the following jobs:

compilation

director

finished configuring resource options for bosh tile

started configuring vm extensions

applying vm-extensions configuration for the following:

spot-instance-m4-large

spot-instance-m4-xlarge

spot-instance-t2-micro

spot-instance-t2-small

spot-instance-t2-medium

spot-instance-r4-xlarge

pas-web-lb

pas-ssh-lb

pas-tcp-lb

finished configuring vm extensions

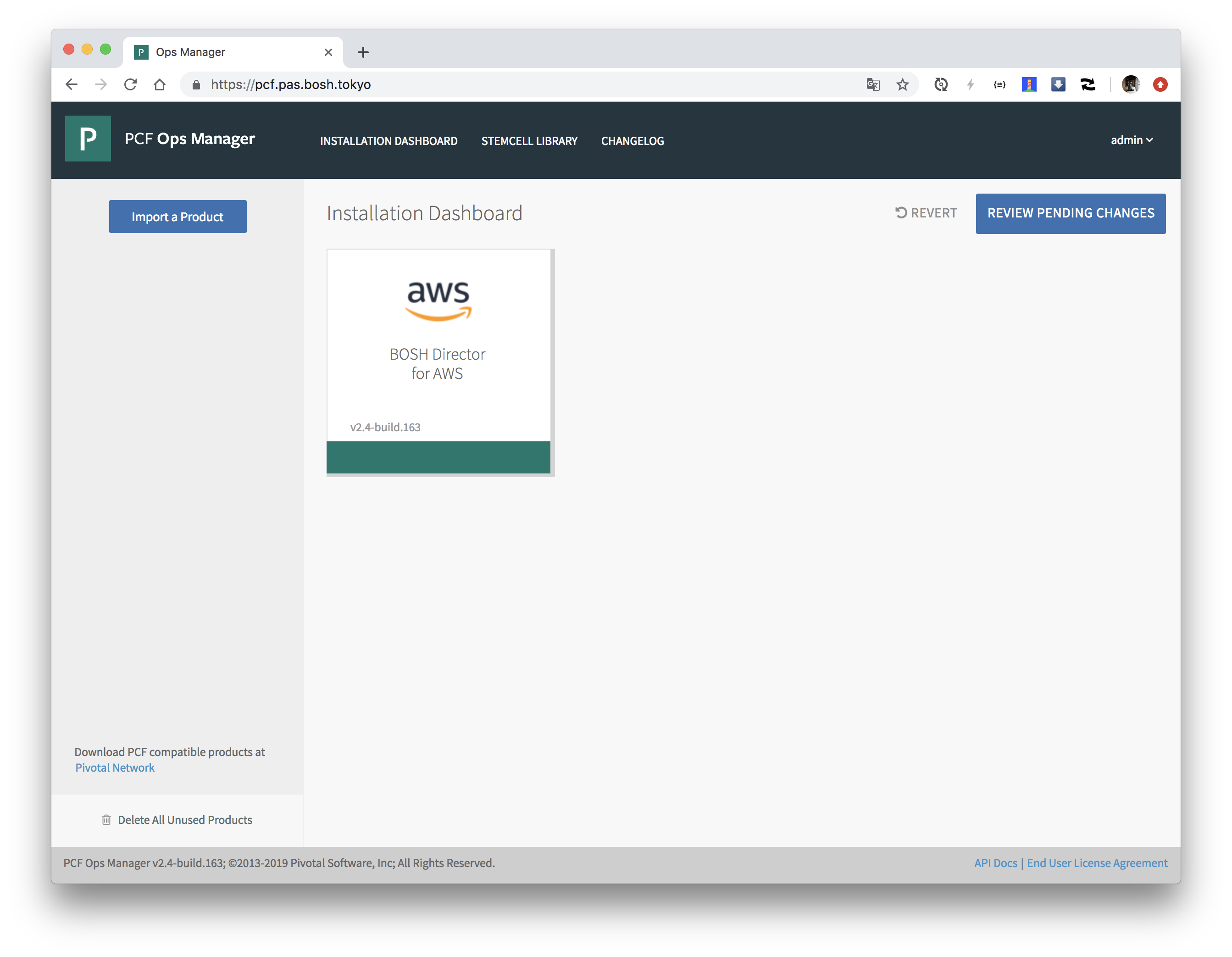

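When the parts that were orange turn green, the configuration is complete.

Fixing the VM types

This step is not required, but it is recommended.

Some of the preset vm_type entries have incorrect memory sizes or CPU counts (they are set lower than the actual specs); this step corrects them.

If they are left incorrect, OpsManager's spec validation fails even for instance types that are actually sufficient, which forces you to choose a larger VM type. Applying this fix lets you start with smaller instances.

First, download the existing settings.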

om curl -s -p /api/v0/vm_types > director/vm_types.json

Open director/vm_types.json and fix the following entries.

t2.medium

{

"name": "t2.medium",

"ram": 4096,

"cpu": 2,

"ephemeral_disk": 32768,

"raw_instance_storage": false,

"builtin": false

},

m4.large

{

"name": "m4.large",

"ram": 8192,

"cpu": 2,

"ephemeral_disk": 32768,

"raw_instance_storage": false,

"builtin": false

},

m4.xlarge

{

"name": "m4.xlarge",

"ram": 16384,

"cpu": 4,

"ephemeral_disk": 65536,

"raw_instance_storage": false,

"builtin": false

},

Apply the modified director/vm_types.json.

om curl -s -p /api/v0/vm_types -x PUT -d "$(cat director/vm_types.json)"

Configuring PAS

First, download PAS from Pivotal Network.

PIVNET_TOKEN can be obtained from the Profile page on Pivotal Network.

PIVNET_TOKEN=***********************************************

pivnet login --api-token=${PIVNET_TOKEN}

Output

Logged-in successfully

Download PAS with the pivnet CLI. Specify the version to download with -r.

pivnet product-files lists the files available for download.

pivnet product-files -p elastic-runtime -r 2.4.4

Output

+--------+--------------------------------+---------------+---------------------+------------------------------------------------------------------+---------------------------------------------------------------------------------------------+

| ID | NAME | FILE VERSION | FILE TYPE | SHA256 | AWS OBJECT KEY |

+--------+--------------------------------+---------------+---------------------+------------------------------------------------------------------+---------------------------------------------------------------------------------------------+

| 277712 | PCF Pivotal Application | 2.4 | Open Source License | | product-files/elastic-runtime/open_source_license_cf-2.4.0-build.360-bbfc877-1545144449.txt |

| | Service v2.4 OSL | | | | |

| 294717 | GCP Terraform Templates 0.63.0 | 0.63.0 | Software | 8d4988c396821d4d0cfb66a4ef470e759ee1ead76b8a06bb8081ff67d710c47d | product-files/elastic-runtime/terraforming-gcp-0.63.0.zip |

| 277284 | Azure Terraform Templates | 0.29.0 | Software | 2c39ccfbe6398ca1570c19f59d3352698255f9639f185c9ab4b3d3faf5268d87 | product-files/elastic-runtime/terraforming-azure-0.29.0.zip |

| | 0.29.0 | | | | |

| 303790 | AWS Terraform Templates 0.24.0 | 0.24.0 | Software | d5175c49bc2caf9a131ceabe6d6a0cd1a92f1a56b539ada09261d89300e57cf5 | product-files/elastic-runtime/terraforming-aws-0.24.0.zip |

| 317727 | Small Footprint PAS | 2.4.4-build.2 | Software | 4ccd8080c3e383d4359208ca1849322a857dbb5d03cc96cfb16ec0fe7b90a6f5 | product-files/elastic-runtime/srt-2.4.4-build.2.pivotal |

| 316657 | CF CLI 6.43.0 | 6.43.0 | Software | 7233a65ff66c0db3339b24e3d71a16cf74187b9d76d45c47b0f1840acf2cf303 | product-files/elastic-runtime/cf-cli-6.43.0.zip |

| 317700 | Pivotal Application Service | 2.4.4-build.2 | Software | 04b381f83736e3d3af398bd6efbbf06dff986c6706371aac6b7f64863ee90d1f | product-files/elastic-runtime/cf-2.4.4-build.2.pivotal |

+--------+--------------------------------+---------------+---------------------+------------------------------------------------------------------+---------------------------------------------------------------------------------------------+

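The files ending in .pivotal are the PAS tiles themselves (the srt one is the Small Footprint Runtime edition). They can be downloaded with the pivnet download-product-files command. The glob is quoted so that the shell does not expand it against local files.

pivnet download-product-files -p elastic-runtime -r 2.4.4 --glob='cf-*.pivotal'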

Output

2019/03/14 00:05:39 Downloading 'cf-2.4.4-build.2.pivotal' to 'cf-2.4.4-build.2.pivotal'

13.97 GiB / 13.97 GiB [==========================================] 100.00% 5m9s

2019/03/14 00:10:53 Verifying SHA256

2019/03/14 00:11:49 Successfully verified SHA256

Upload the downloaded file to OpsManager.

om upload-product -p cf-2.4.4-build.2.pivotal

Output

processing product

beginning product upload to Ops Manager

13.97 GiB / 13.97 GiB [=========================================] 100.00% 10m3s

4m26s elapsed, waiting for response from Ops Manager...

finished upload

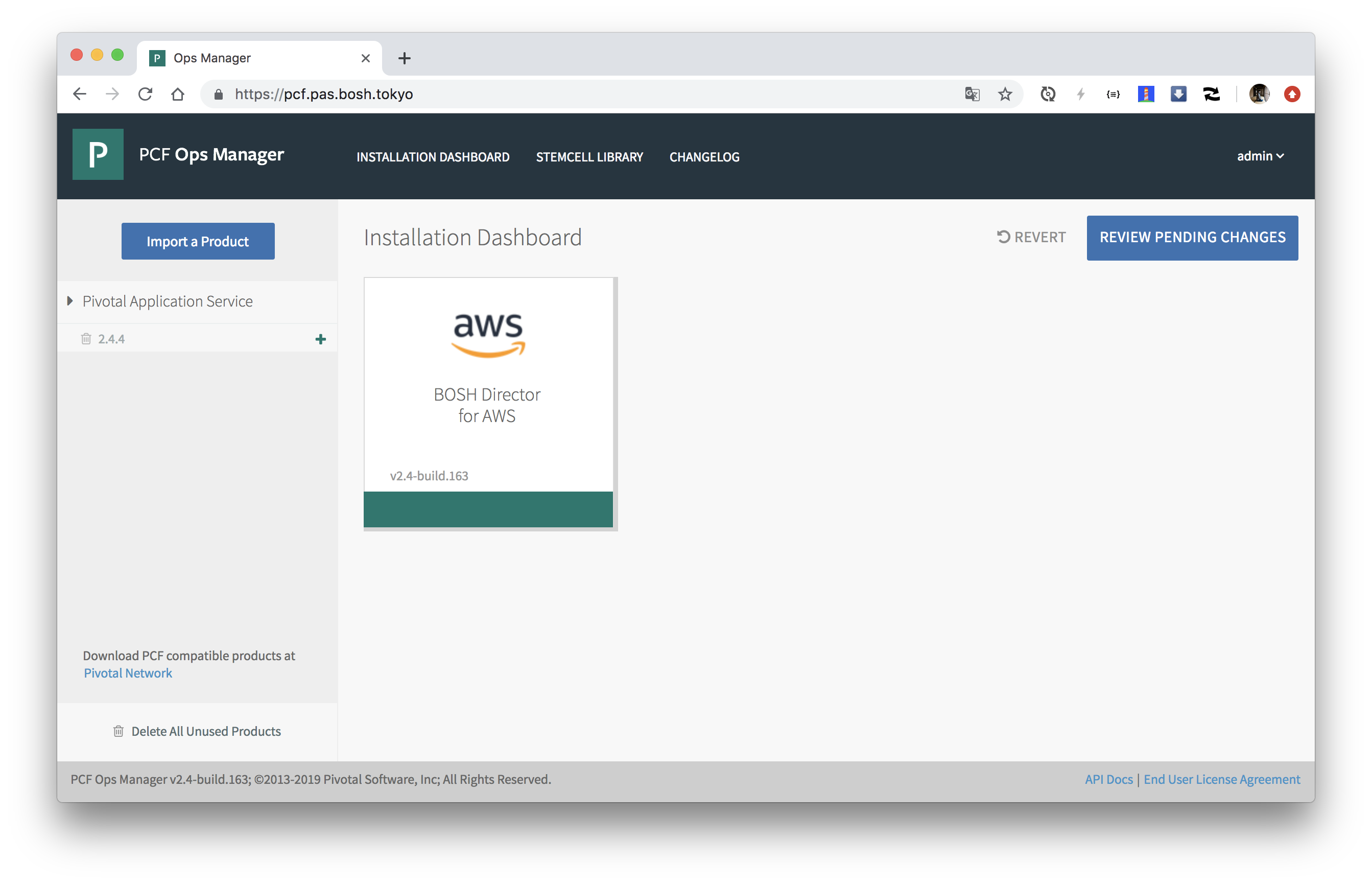

Uploaded products appear on the left side of OpsManager.

Stage the uploaded product. (In the UI this is just a click on the "+" button, but here it is done with the CLI.)

export PRODUCT_NAME=cf

export PRODUCT_VERSION=2.4.4

om stage-product -p ${PRODUCT_NAME} -v ${PRODUCT_VERSION}

Output

staging cf 2.4.4

finished staging
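PRODUCT_NAME and PRODUCT_VERSION were typed by hand above. For the cf-*.pivotal file used here they can also be read off the file name, as in the sketch below. product_from_file is a hypothetical helper; note that the file name prefix is not the product name in general (the srt file, for instance, is a different file name for a tile of the same product family), so treat this as an assumption that holds for cf-2.4.4-build.2.pivotal.

```shell
# Hypothetical helper: split "<name>-<version>-build.<n>.pivotal" into
# "<name> <version>" using only shell parameter expansion.
product_from_file() {
  file="${1##*/}"           # drop any directory part
  base="${file%.pivotal}"   # cf-2.4.4-build.2
  name="${base%%-*}"        # text before the first "-": cf
  rest="${base#*-}"         # text after the first "-": 2.4.4-build.2
  version="${rest%%-*}"     # text before the next "-": 2.4.4
  echo "$name $version"
}

product_from_file cf-2.4.4-build.2.pivotal   # prints: cf 2.4.4
```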

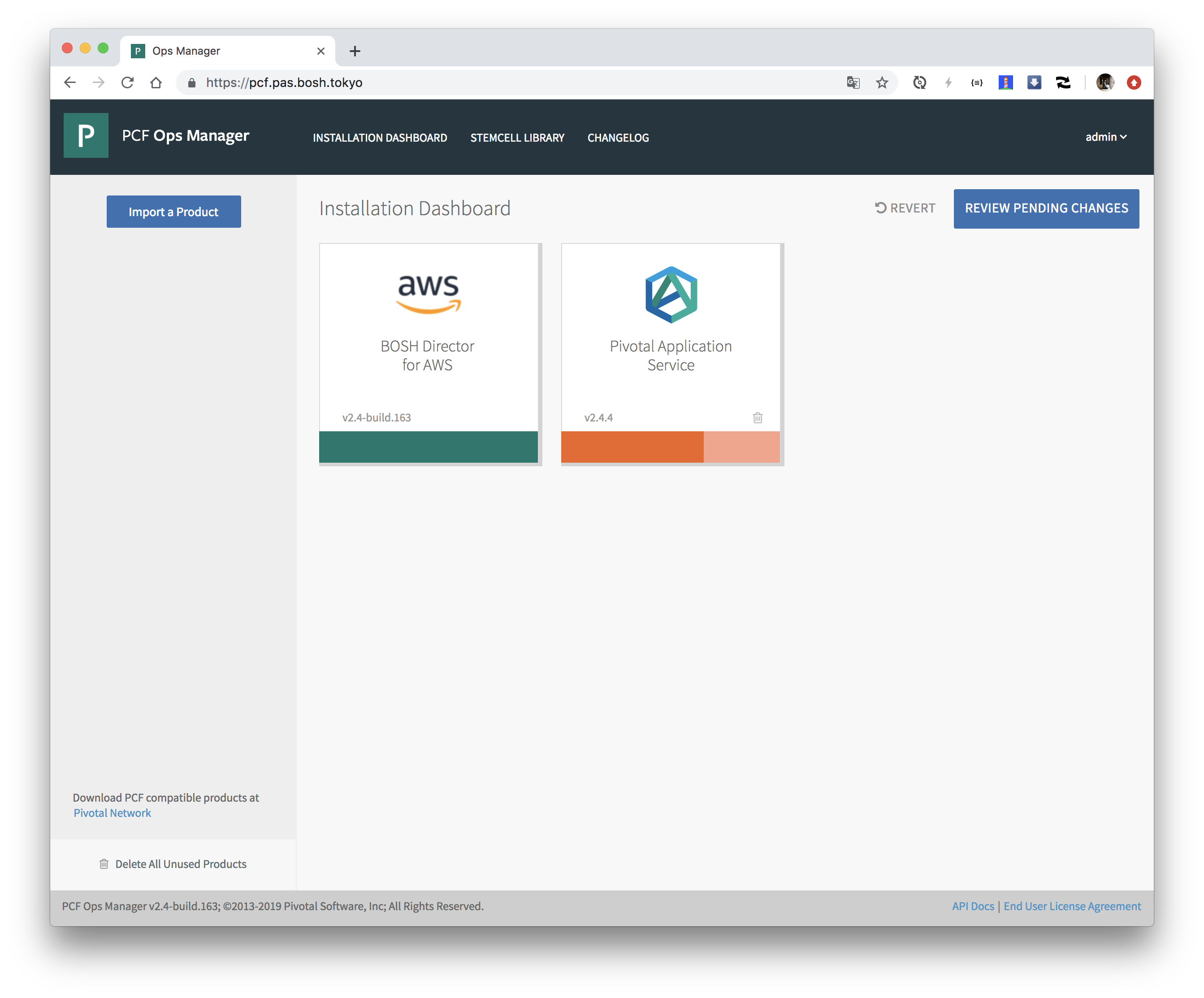

The PAS tile now appears. It is orange, which means its configuration is not complete yet.

As with the BOSH Director configuration, generate a YAML file of parameters (vars.yml) for configuring PAS from the terraform output.

Generating vars.yml

export TF_DIR=$(pwd)

mkdir pas

cd pas

export SINGLETON_AVAILABILITY_ZONE=$(terraform output --state=${TF_DIR}/terraform.tfstate --json azs | jq -r '.value[0]')

export AVAILABILITY_ZONES=$(terraform output --state=${TF_DIR}/terraform.tfstate --json azs | jq -r '.value | map({name: .})' | tr -d '\n' | tr -d '"')

export AVAILABILITY_ZONE_NAMES=$(terraform output --state=${TF_DIR}/terraform.tfstate azs | tr -d '\n' | tr -d '"')

export SYSTEM_DOMAIN=$(terraform output --state=${TF_DIR}/terraform.tfstate sys_domain)

export PAS_MAIN_NETWORK_NAME=pas-deployment

export PAS_SERVICES_NETWORK_NAME=pas-services

export APPS_DOMAIN=$(terraform output --state=${TF_DIR}/terraform.tfstate apps_domain)

export WEB_TARGET_GROUPS=$(terraform output --state=${TF_DIR}/terraform.tfstate web_target_groups | tr -d '\n')

export SSH_TARGET_GROUPS=$(terraform output --state=${TF_DIR}/terraform.tfstate ssh_target_groups | tr -d '\n')

export TCP_TARGET_GROUPS=$(terraform output --state=${TF_DIR}/terraform.tfstate tcp_target_groups | tr -d '\n')

export ACCESS_KEY_ID=$(terraform output --state=${TF_DIR}/terraform.tfstate ops_manager_iam_user_access_key)

export SECRET_ACCESS_KEY=$(terraform output --state=${TF_DIR}/terraform.tfstate ops_manager_iam_user_secret_key)

export S3_ENDPOINT=https://s3.$(terraform output --state=${TF_DIR}/terraform.tfstate region).amazonaws.com

export S3_REGION=$(terraform output --state=${TF_DIR}/terraform.tfstate region)

export PAS_BUILDPACKS_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate pas_buildpacks_bucket)

export PAS_DROPLETS_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate pas_droplets_bucket)

export PAS_PACKAGES_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate pas_packages_bucket)

export PAS_RESOURCES_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate pas_resources_bucket)

export PAS_BUILDPACKS_BACKUP_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate pas_buildpacks_backup_bucket)

export PAS_DROPLETS_BACKUP_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate pas_droplets_backup_bucket)

export PAS_PACKAGES_BACKUP_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate pas_packages_backup_bucket)

export PAS_RESOURCES_BACKUP_BUCKET=$(terraform output --state=${TF_DIR}/terraform.tfstate pas_resources_backup_bucket)

export BLOBSTORE_KMS_KEY_ID=$(terraform output --state=${TF_DIR}/terraform.tfstate blobstore_kms_key_id)

export CERT_PEM=`cat <<EOF | sed 's/^/ /'

$(terraform output --state=${TF_DIR}/terraform.tfstate ssl_cert)

EOF

`

export KEY_PEM=`cat <<EOF | sed 's/^/ /'

$(terraform output --state=${TF_DIR}/terraform.tfstate ssl_private_key)

EOF

`

cat <<EOF > vars.yml

cert_pem: |

${CERT_PEM}

key_pem: |

${KEY_PEM}

availability_zone_names: ${AVAILABILITY_ZONE_NAMES}

pas_main_network_name: ${PAS_MAIN_NETWORK_NAME}

pas_services_network_name: ${PAS_SERVICES_NETWORK_NAME}

availability_zones: ${AVAILABILITY_ZONES}

singleton_availability_zone: ${SINGLETON_AVAILABILITY_ZONE}

apps_domain: ${APPS_DOMAIN}

system_domain: ${SYSTEM_DOMAIN}

web_target_groups: ${WEB_TARGET_GROUPS}

ssh_target_groups: ${SSH_TARGET_GROUPS}

tcp_target_groups: ${TCP_TARGET_GROUPS}

access_key_id: ${ACCESS_KEY_ID}

secret_access_key: ${SECRET_ACCESS_KEY}

s3_endpoint: ${S3_ENDPOINT}

s3_region: ${S3_REGION}

pas_buildpacks_bucket: ${PAS_BUILDPACKS_BUCKET}

pas_droplets_bucket: ${PAS_DROPLETS_BUCKET}

pas_packages_bucket: ${PAS_PACKAGES_BUCKET}

pas_resources_bucket: ${PAS_RESOURCES_BUCKET}

blobstore_kms_key_id: ${BLOBSTORE_KMS_KEY_ID}

smtp_from: ${SMTP_FROM}

smtp_address: ${SMTP_ADDRESS}

smtp_port: ${SMTP_PORT}

smtp_username: ${SMTP_USERNAME}

smtp_password: ${SMTP_PASSWORD}

smtp_enable_starttls: ${SMTP_ENABLE_STARTTLS}

EOF
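If any terraform output call fails, or an environment variable such as SMTP_FROM is not set, the corresponding line in vars.yml silently ends up with an empty value. A minimal sketch for spotting such lines before going on; empty_keys is a helper name made up for this memo. The SMTP settings are optional, so empty smtp_* keys may be intentional.

```shell
#!/usr/bin/env bash
# empty_keys FILE
# Prints every top-level key in FILE whose value is empty, one per line.
# Block scalars such as "cert_pem: |" are not reported, because "|" follows the colon.
# Hypothetical helper for this memo.
empty_keys() {
  sed -nE 's/^([A-Za-z0-9_]+):[[:space:]]*$/\1/p' "$1"
}

# Usage:
# empty_keys vars.yml
```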

Next, create the PAS configuration file (config.yml).

Generating config.yml

cat <<EOF > config.yml
product-name: cf
product-properties:
  # System domain
  .cloud_controller.system_domain:
    value: ((system_domain))
  # Apps domain
  .cloud_controller.apps_domain:
    value: ((apps_domain))
  # TLS termination. Here TLS is terminated at the GoRouter.
  .properties.routing_tls_termination:
    value: router
  # HA Proxy settings (not used, so ignore)
  .properties.haproxy_forward_tls:
    value: disable
  .ha_proxy.skip_cert_verify:
    value: true
  # TLS certificate used by the GoRouter
  .properties.networking_poe_ssl_certs:
    value:
    - name: pas-wildcard
      certificate:
        cert_pem: ((cert_pem))
        private_key_pem: ((key_pem))
  # Traffic Controller port
  .properties.logger_endpoint_port:
    value: 443
  # Enable Dynamic Egress Policies, a new feature in PAS 2.4
  # https://docs.pivotal.io/pivotalcf/2-4/installing/highlights.html#dynamic-egress
  .properties.experimental_dynamic_egress_enforcement:
    value: 1
  .properties.security_acknowledgement:
    value: X
  .properties.secure_service_instance_credentials:
    value: true
  .properties.cf_networking_enable_space_developer_self_service:
    value: true
  .uaa.service_provider_key_credentials:
    value:
      cert_pem: ((cert_pem))
      private_key_pem: ((key_pem))
  .properties.credhub_key_encryption_passwords:
    value:
    - name: key1
      key:
        secret: credhubsecret1credhubsecret1
      primary: true
  .mysql_monitor.recipient_email:
    value: notify@example.com
  # System database. Here Internal MySQL (Percona XtraDB Cluster) is chosen rather than External (RDS etc.).
  .properties.system_database:
    value: internal_pxc
  # Use TLS for connections to PXC
  .properties.enable_tls_to_internal_pxc:
    value: 1
  # Use the internal database for UAA
  .properties.uaa_database:
    value: internal_mysql
  # Use the internal database for CredHub
  .properties.credhub_database:
    value: internal_mysql
  # Blobstore settings. Here External (S3) is chosen.
  .properties.system_blobstore:
    value: external
  .properties.system_blobstore.external.endpoint:
    value: ((s3_endpoint))
  .properties.system_blobstore.external.access_key:
    value: ((access_key_id))
  .properties.system_blobstore.external.secret_key:
    value:
      secret: "((secret_access_key))"
  .properties.system_blobstore.external.signature_version:
    value: 4
  .properties.system_blobstore.external.region:
    value: ((s3_region))
  .properties.system_blobstore.external.buildpacks_bucket:
    value: ((pas_buildpacks_bucket))
  .properties.system_blobstore.external.droplets_bucket:
    value: ((pas_droplets_bucket))
  .properties.system_blobstore.external.packages_bucket:
    value: ((pas_packages_bucket))
  .properties.system_blobstore.external.resources_bucket:
    value: ((pas_resources_bucket))
  .properties.system_blobstore.external.encryption_kms_key_id:
    value: ((blobstore_kms_key_id))
  .properties.autoscale_instance_count:
    value: 1
  # SMTP settings for notifications (optional)
  .properties.smtp_from:
    value: ((smtp_from))
  .properties.smtp_address:
    value: ((smtp_address))
  .properties.smtp_port:
    value: ((smtp_port))
  .properties.smtp_credentials:
    value:
      identity: ((smtp_username))
      password: ((smtp_password))
  .properties.smtp_enable_starttls_auto:
    value: ((smtp_enable_starttls))
  # Enable Metric Registrar, a new feature in PAS 2.4
  # https://docs.pivotal.io/pivotalcf/2-4/installing/highlights.html#metric-registrar
  .properties.metric_registrar_enabled:
    value: 1
network-properties:
  # Network PAS is deployed to
  network:
    name: ((pas_main_network_name))
  # Network for on-demand services (not used by PAS itself)
  service_network:
    name: ((pas_services_network_name))
  # AZs for components that can scale out
  other_availability_zones: ((availability_zones))
  # AZ for components that cannot scale out
  singleton_availability_zone:
    name: ((singleton_availability_zone))
# Per-VM settings (instance count, VM type, VM extensions)
resource-config:
  nats:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  backup_restore:
    instances: 0
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  diego_database:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  uaa:
    instances: 1
    instance_type:
      id: t2.medium
    additional_vm_extensions:
    - spot-instance-t2-medium
  cloud_controller:
    instances: 1
    instance_type:
      id: t2.medium
    additional_vm_extensions:
    - spot-instance-t2-medium
  ha_proxy:
    instances: 0
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  router:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
    # Attach the NLB for web traffic
    - pas-web-lb
  mysql_monitor:
    instances: 0
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  clock_global:
    instances: 1
    instance_type:
      id: t2.medium
    additional_vm_extensions:
    - spot-instance-t2-medium
  cloud_controller_worker:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  diego_brain:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
    # Attach the NLB for SSH
    - pas-ssh-lb
  diego_cell:
    instances: 1
    instance_type:
      id: r4.xlarge
    additional_vm_extensions:
    - spot-instance-r4-xlarge
  loggregator_trafficcontroller:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  syslog_adapter:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  syslog_scheduler:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  doppler:
    instances: 1
    instance_type:
      id: t2.medium
    additional_vm_extensions:
    - spot-instance-t2-medium
  tcp_router:
    instances: 0
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
    # Attach the NLB for TCP
    - pas-tcp-lb
  credhub:
    instances: 1
    instance_type:
      id: m4.large
    additional_vm_extensions:
    - spot-instance-m4-large
  # Definitions used with Internal MySQL
  mysql_proxy:
    instances: 1
    instance_type:
      id: t2.micro
    additional_vm_extensions:
    - spot-instance-t2-micro
  mysql:
    instances: 1
    instance_type:
      id: m4.large
    persistent_disk:
      size_mb: "10240"
    additional_vm_extensions:
    - spot-instance-m4-large
  # Definition used with the Internal Blobstore
  nfs_server:
    instances: 0
    instance_type:
      id: t2.medium
    persistent_disk:
      size_mb: "51200"
    additional_vm_extensions:
    - spot-instance-t2-medium
EOF
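config.yml refers to values in vars.yml through ((name)) placeholders, so a misspelled or missing key only surfaces when om configure-product runs. A minimal sketch that lists placeholders without a matching top-level key, using only grep; missing_vars is a helper name made up for this memo. For the two files generated above it is expected to print nothing.

```shell
#!/usr/bin/env bash
# missing_vars CONFIG VARS
# Prints each ((name)) placeholder used in CONFIG that has no
# top-level "name:" key in VARS, one per line.
# Hypothetical helper for this memo; it does not parse YAML properly.
missing_vars() {
  local config=$1 vars=$2 name
  grep -oE '\(\([A-Za-z0-9_]+\)\)' "$config" | tr -d '()' | sort -u |
    while read -r name; do
      grep -qE "^${name}:" "$vars" || echo "$name"
    done
}

# Usage (run inside the pas directory):
# missing_vars config.yml vars.yml
```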

Configure PAS using config.yml and vars.yml.

cd ..

om configure-product \
  --config ./pas/config.yml \
  --vars-file ./pas/vars.yml

Output

configuring product...

setting up network

finished setting up network

setting properties

finished setting properties

applying resource configuration for the following jobs:

backup_restore

clock_global

cloud_controller

cloud_controller_worker

credhub

diego_brain

diego_cell

diego_database

doppler

ha_proxy

loggregator_trafficcontroller

mysql

mysql_monitor

mysql_proxy

nats

nfs_server

router

syslog_adapter

syslog_scheduler

tcp_router

uaa

errands are not provided, nothing to do here

finished configuring product

All properties can be checked with om staged-config -p cf -c -r.

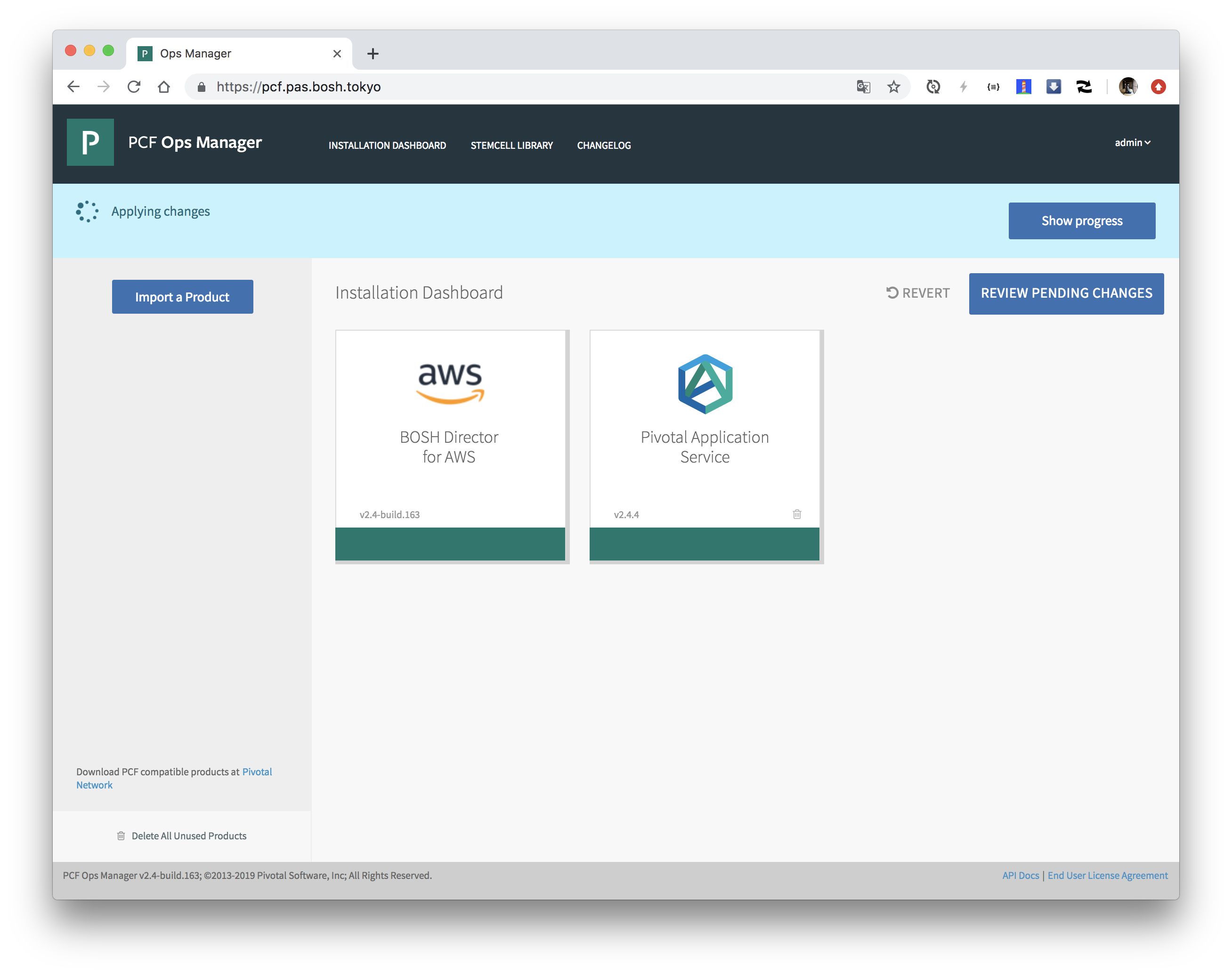

Deploying PAS

Apply the configuration to install the BOSH Director and PAS.

om apply-changes

Wait for it to finish. It takes roughly 1-2 hours.

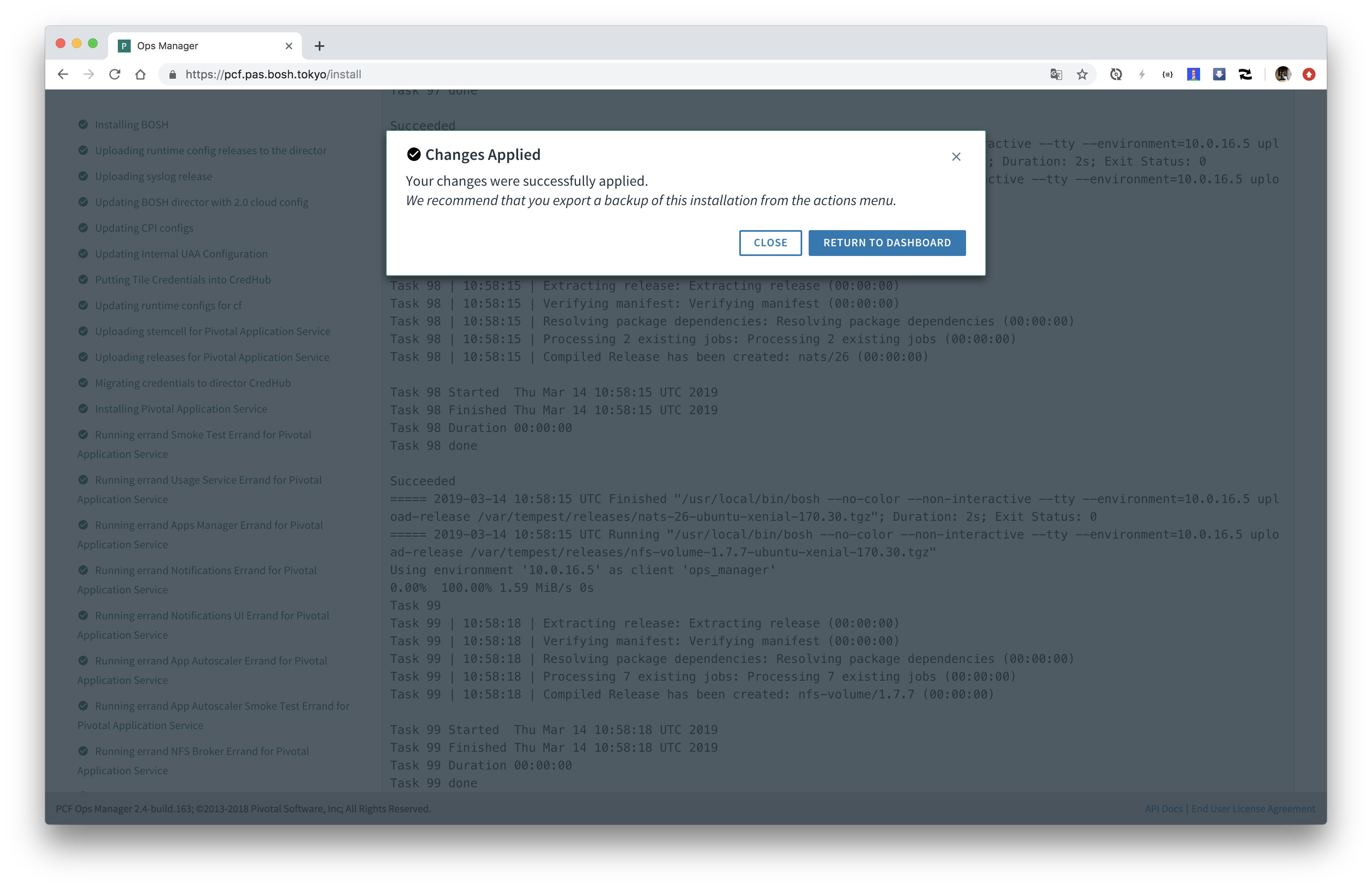

When it completes, "Changes Applied" is displayed.

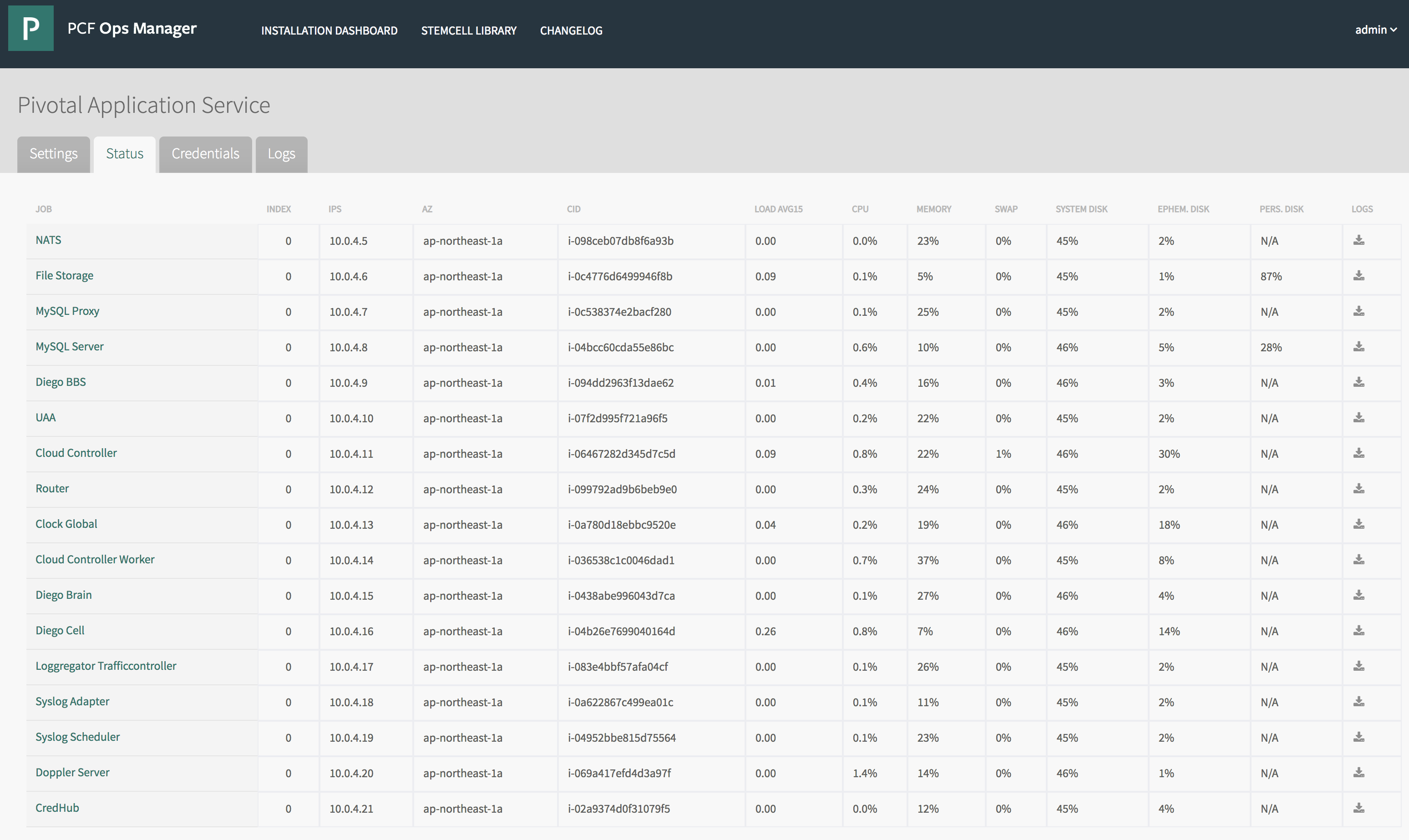

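The captures in this memo show 17 VMs, because they were taken while the File Storage VM (nfs_server) was still enabled. With nfs_server set to 0 as in config.yml above, 16 VMs are created.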

Check the EC2 console and confirm that Spot Instances are actually being used.
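The same check can be made from a terminal. A rough sketch, assuming the AWS CLI is installed and configured with credentials for this account, and that the region is ap-northeast-1 as in terraform.tfvars; list_spot_instances and count_by_type are names made up for this memo.

```shell
#!/usr/bin/env bash
# Lists running Spot Instances as "<instance id> <instance type>" lines.
# Assumes the AWS CLI is configured; the region default is taken from terraform.tfvars.
list_spot_instances() {
  aws ec2 describe-instances \
    --region "${AWS_REGION:-ap-northeast-1}" \
    --filters "Name=instance-lifecycle,Values=spot" \
              "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].[InstanceId,InstanceType]' \
    --output text
}

# Reads "<instance id> <instance type>" lines and prints "<type> <count>" per type.
count_by_type() {
  awk '{ n[$2]++ } END { for (t in n) print t, n[t] }' | sort
}

# Usage:
# list_spot_instances | count_by_type
```

Note that this counts every running Spot Instance in the region, not only the VMs created by BOSH.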

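For reference, when PAS with the configuration above is built on Spot Instances, the EC2 VM charge is as follows (hourly price x 24 hours x 30 days x 111 x number of VMs; the factor 111 appears to be the JPY/USD exchange rate).

- t2.micro x 9 => 0.0050 * 24 * 30 * 111 * 9 = 3,596 JPY/month

- t2.medium x 5 => 0.0095 * 24 * 30 * 111 * 5 = 3,796 JPY/month

- m4.large x 2 => 0.0340 * 24 * 30 * 111 * 2 = 5,435 JPY/month

- r4.xlarge x 1 => 0.0700 * 24 * 30 * 111 * 1 = 5,594 JPY/month

Total: 18,421 JPY/month.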
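The estimate above can be reproduced, or redone with current spot prices, using a few lines of awk. This is only a sketch: the prices and VM counts are the ones quoted above, the factor 111 is treated as an assumed JPY/USD rate, and monthly_cost is a name made up for this memo. Each row is rounded to the nearest yen before summing, which matches the figures above.

```shell
#!/usr/bin/env bash
# Assumed exchange rate (JPY per USD); this is the factor 111 used in the memo.
JPY_PER_USD=111

# monthly_cost reads "<type> <hourly price in USD> <count>" lines from stdin
# and prints the monthly cost per type plus the total, in JPY.
monthly_cost() {
  awk -v rate="$JPY_PER_USD" '
    {
      cost = int($2 * 24 * 30 * rate * $3 + 0.5)   # round each row to whole yen
      total += cost
      printf "%-10s x%d => %d JPY/month\n", $1, $3, cost
    }
    END { printf "total => %d JPY/month\n", total }'
}

monthly_cost <<'EOF'
t2.micro 0.0050 9
t2.medium 0.0095 5
m4.large 0.0340 2
r4.xlarge 0.0700 1
EOF
```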

The component diagram at this point looks as follows.

PlantUML (for reference)

@startuml

package "public" {

package "az1 (10.0.0.0/24)" {

node "Ops Manager"

rectangle "web-lb-1"

rectangle "ssh-lb-1"

boundary "NAT Gateway"

}

package "az2 (10.0.1.0/24)" {

rectangle "web-lb-2"

rectangle "ssh-lb-2"

}

package "az3 (10.0.2.0/24)" {

rectangle "web-lb-3"

rectangle "ssh-lb-3"

}

}

package "infrastructure" {

package "az2 (10.0.16.16/28)" {

}

package "az3 (10.0.16.32/28)" {

}

package "az1 (10.0.16.0/28)" {

node "BOSH Director"

}

}

package "deployment" {

package "az1 (10.0.4.0/24)" {

node "NATS"

node "Router"

database "File Storage"

package "MySQL" {

node "MySQL Proxy"

database "MySQL Server"

}

package "CAPI" {

node "Cloud Controller"

node "Clock Global"

node "Cloud Controller Worker"

}

package "Diego" {

node "Diego Brain"

node "DiegoCell" {

(app3)

(app2)

(app1)

}

node "Diego BBS"

}

package "Loggregator" {

node "Loggregator Trafficcontroller"

node "Syslog Adapter"

node "Syslog Scheduler"

node "Doppler Server"

}

node "UAA"

node "CredHub"

}

package "az2 (10.0.5.0/24)" {

}

package "az3 (10.0.6.0/24)" {

}

}

package "services" {

package "az3 (10.0.10.0/24)" {

}

package "az2 (10.0.9.0/24)" {

}

package "az1 (10.0.8.0/24)" {

}

}

boundary "Internet Gateway"

node firehose

actor User #red

actor Developer #blue

actor Operator #green

User -[#red]--> [web-lb-1]

User -[#red]--> [web-lb-2]

User -[#red]--> [web-lb-3]

Developer -[#blue]--> [web-lb-1] : "cf push"

Developer -[#blue]--> [web-lb-2]

Developer -[#blue]--> [web-lb-3]

Developer -[#magenta]--> [ssh-lb-1] : "cf ssh"

Developer -[#magenta]--> [ssh-lb-2]

Developer -[#magenta]--> [ssh-lb-3]

Operator -[#green]--> [Ops Manager]

public -up-> [Internet Gateway]

infrastructure -> [NAT Gateway]

deployment -> [NAT Gateway]

services -> [NAT Gateway]

[Ops Manager] .> [BOSH Director] :bosh

[BOSH Director] .down.> deployment :create-vm

[BOSH Director] .down.> services :create-vm

[web-lb-1] -[#red]-> Router

[web-lb-1] -[#blue]-> Router

[web-lb-2] -[#red]-> Router

[web-lb-2] -[#blue]-> Router

[web-lb-3] -[#red]-> Router

[web-lb-3] -[#blue]-> Router

[ssh-lb-1] -[#magenta]-> [Diego Brain]

[ssh-lb-2] -[#magenta]-> [Diego Brain]

[ssh-lb-3] -[#magenta]-> [Diego Brain]

Router -[#red]-> app1

Router -[#blue]-> [Cloud Controller]

Router -[#blue]-> [UAA]

[Diego Brain] -[#magenta]-> app2

[Cloud Controller] --> [MySQL Proxy]

[Cloud Controller] <-left-> [Cloud Controller Worker]

[Cloud Controller] <-right-> [Clock Global]

[Cloud Controller] -up-> [File Storage]

[Clock Global] <-> [Diego BBS]

[UAA] --> [MySQL Proxy]

[CredHub] -up-> [MySQL Proxy]

[Diego BBS] --> [MySQL Proxy]

[MySQL Proxy] --> [MySQL Server]

[Diego Brain] <-> [Diego BBS]

[Diego Brain] <--> DiegoCell

DiegoCell -up-> NATS

DiegoCell -> CredHub

NATS -up-> Router

[Doppler Server] --> [Loggregator Trafficcontroller]

[Loggregator Trafficcontroller] -right-> [Syslog Adapter]

[Syslog Adapter] -up-> [Syslog Scheduler]

[Loggregator Trafficcontroller] --> firehose

Diego .> [Doppler Server] : metrics

CAPI .> [Doppler Server] : metrics

Router .> [Doppler Server] : metrics

app1 ..> [Doppler Server] : log&metrics

app2 ..> [Doppler Server] : log&metrics

app3 ..> [Doppler Server] : log&metrics

@enduml

Logging in to PAS

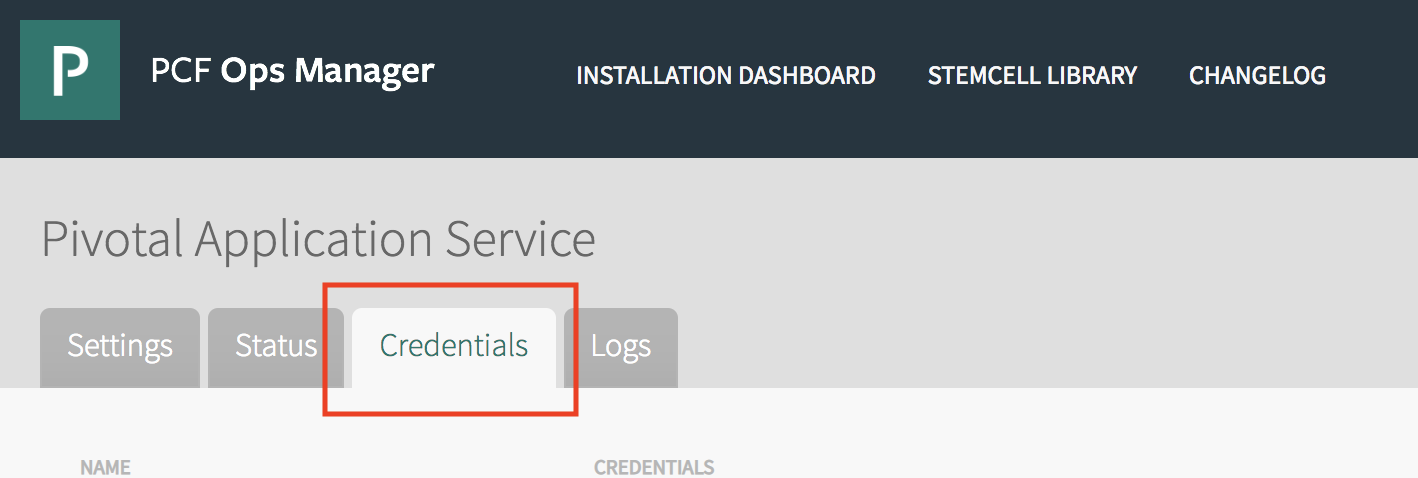

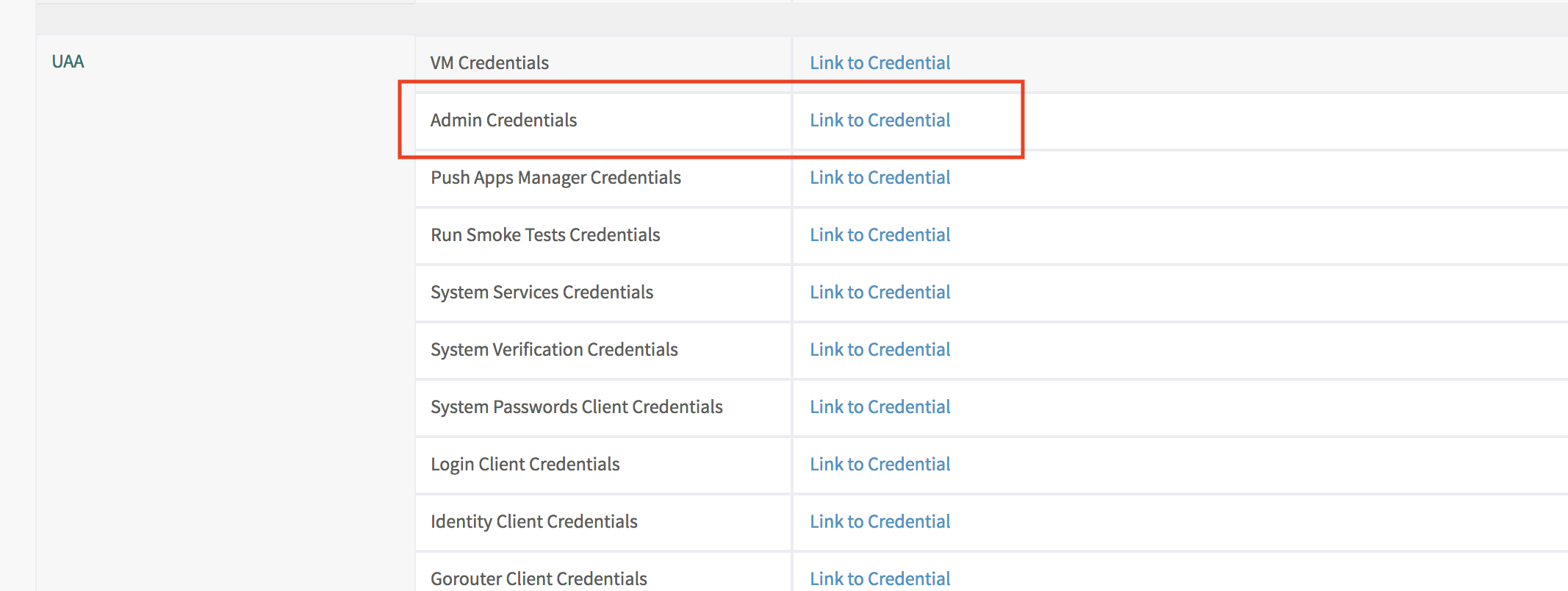

The admin user's password is listed under "Admin Credentials" for "UAA" in the "Credentials" tab of the "Pivotal Application Service" tile.

It can also be retrieved with the om command.

ADMIN_PASSWORD=$(om credentials -p cf -c .uaa.admin_credentials --format json | jq -r .password)

Log in with the cf login command. The API server URL is https://api.$(terraform output sys_domain).

API_URL=https://api.$(terraform output sys_domain)

cf login -a ${API_URL} -u admin -p ${ADMIN_PASSWORD}

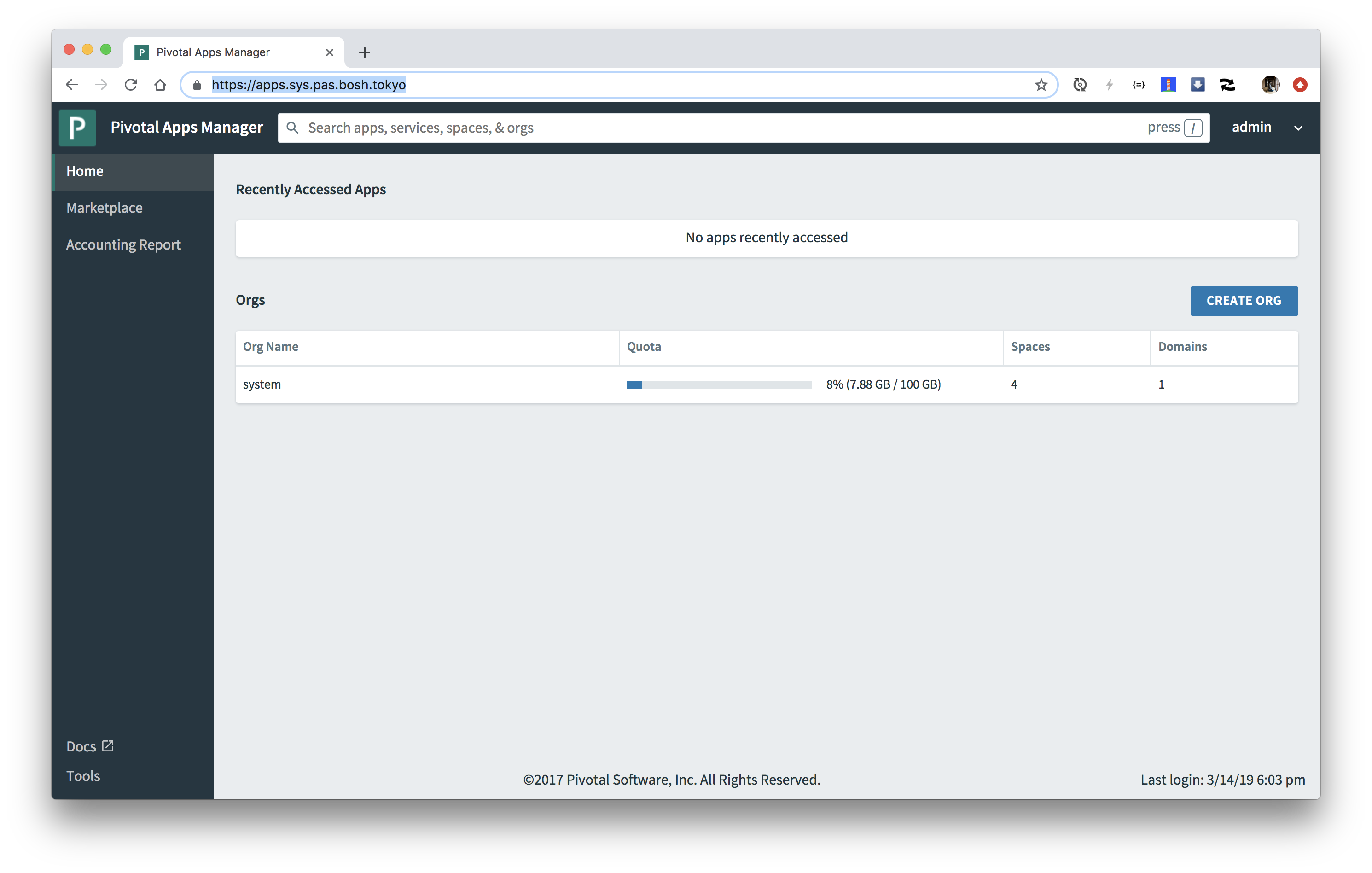

Accessing Apps Manager

The Apps Manager URL is https://apps.$(terraform output sys_domain). Open it in a browser.

Log in with the same account used for cf login.

Trying out PAS

Download the sample app.

wget https://gist.github.com/making/fca49149aea3a7307b293685ba20c7b7/raw/6daab9a0a88fe0f36072ca4d1ee622d2354f3505/pcf-ers-demo1-0.0.1-SNAPSHOT.jar

Deploy the Java application to PAS with cf push <app name> -p <path to the jar file>.

cf push attendees -p pcf-ers-demo1-0.0.1-SNAPSHOT.jar -m 768m

Output

admin としてアプリ attendees を組織 system / スペース system にプッシュしています...

アプリ情報を取得しています...

これらの属性でアプリを作成しています...

+ 名前: attendees

パス: /private/tmp/pcf-ers-demo1-0.0.1-SNAPSHOT.jar

+ メモリー: 768M

経路:

+ attendees.apps.pas.bosh.tokyo

アプリ attendees を作成しています...

経路をマップしています...

ローカル・ファイルをリモート・キャッシュと比較しています...

Packaging files to upload...

ファイルをアップロードしています...

681.36 KiB / 681.36 KiB [============================================================================================================================================================================================================================] 100.00% 1s

API がファイルの処理を完了するのを待機しています...

アプリをステージングし、ログをトレースしています...

Downloading binary_buildpack...

Downloading ruby_buildpack...

Downloading staticfile_buildpack...

Downloading java_buildpack_offline...

Downloading python_buildpack...

Downloaded ruby_buildpack

Downloaded staticfile_buildpack

Downloading go_buildpack...

Downloaded java_buildpack_offline

Downloading php_buildpack...

Downloading nodejs_buildpack...

Downloaded python_buildpack

Downloading dotnet_core_buildpack...

Downloaded binary_buildpack

Downloaded php_buildpack

Downloaded go_buildpack

Downloaded nodejs_buildpack

Downloaded dotnet_core_buildpack

Cell 7835f3b0-4951-4d38-a70f-1cf3d60fd9ab creating container for instance ce80e22f-a0f3-40f8-8ccb-4953784a9a0f

Cell 7835f3b0-4951-4d38-a70f-1cf3d60fd9ab successfully created container for instance ce80e22f-a0f3-40f8-8ccb-4953784a9a0f

Downloading app package...

Downloaded app package (34.4M)

-----> Java Buildpack v4.16.1 (offline) | https://github.com/cloudfoundry/java-buildpack.git#41b8ff8

-----> Downloading Jvmkill Agent 1.16.0_RELEASE from https://java-buildpack.cloudfoundry.org/jvmkill/bionic/x86_64/jvmkill-1.16.0_RELEASE.so (found in cache)

-----> Downloading Open Jdk JRE 1.8.0_192 from https://java-buildpack.cloudfoundry.org/openjdk/bionic/x86_64/openjdk-1.8.0_192.tar.gz (found in cache)

Expanding Open Jdk JRE to .java-buildpack/open_jdk_jre (1.1s)

JVM DNS caching disabled in lieu of BOSH DNS caching

-----> Downloading Open JDK Like Memory Calculator 3.13.0_RELEASE from https://java-buildpack.cloudfoundry.org/memory-calculator/bionic/x86_64/memory-calculator-3.13.0_RELEASE.tar.gz (found in cache)

Loaded Classes: 17720, Threads: 250

-----> Downloading Client Certificate Mapper 1.8.0_RELEASE from https://java-buildpack.cloudfoundry.org/client-certificate-mapper/client-certificate-mapper-1.8.0_RELEASE.jar (found in cache)

-----> Downloading Container Security Provider 1.16.0_RELEASE from https://java-buildpack.cloudfoundry.org/container-security-provider/container-security-provider-1.16.0_RELEASE.jar (found in cache)

-----> Downloading Spring Auto Reconfiguration 2.5.0_RELEASE from https://java-buildpack.cloudfoundry.org/auto-reconfiguration/auto-reconfiguration-2.5.0_RELEASE.jar (found in cache)

Exit status 0

Uploading droplet, build artifacts cache...

Uploading droplet...

Uploading build artifacts cache...

Uploaded build artifacts cache (128B)

Uploaded droplet (81.6M)

Uploading complete

Cell 7835f3b0-4951-4d38-a70f-1cf3d60fd9ab stopping instance ce80e22f-a0f3-40f8-8ccb-4953784a9a0f

Cell 7835f3b0-4951-4d38-a70f-1cf3d60fd9ab destroying container for instance ce80e22f-a0f3-40f8-8ccb-4953784a9a0f

アプリが開始するのを待機しています...

Cell 7835f3b0-4951-4d38-a70f-1cf3d60fd9ab successfully destroyed container for instance ce80e22f-a0f3-40f8-8ccb-4953784a9a0f

名前: attendees

要求された状態: started

経路: attendees.apps.pas.bosh.tokyo

最終アップロード日時: Thu 14 Mar 20:40:16 JST 2019

スタック: cflinuxfs3

ビルドパック: client-certificate-mapper=1.8.0_RELEASE container-security-provider=1.16.0_RELEASE java-buildpack=v4.16.1-offline-https://github.com/cloudfoundry/java-buildpack.git#41b8ff8 java-main java-opts java-security

jvmkill-agent=1.16.0_RELEASE open-jd...

タイプ: web

インスタンス: 1/1

メモリー使用量: 768M

開始コマンド: JAVA_OPTS="-agentpath:$PWD/.java-buildpack/open_jdk_jre/bin/jvmkill-1.16.0_RELEASE=printHeapHistogram=1 -Djava.io.tmpdir=$TMPDIR -Djava.ext.dirs=$PWD/.java-buildpack/container_security_provider:$PWD/.java-buildpack/open_jdk_jre/lib/ext

-Djava.security.properties=$PWD/.java-buildpack/java_security/java.security $JAVA_OPTS" && CALCULATED_MEMORY=$($PWD/.java-buildpack/open_jdk_jre/bin/java-buildpack-memory-calculator-3.13.0_RELEASE -totMemory=$MEMORY_LIMIT

-loadedClasses=18499 -poolType=metaspace -stackThreads=250 -vmOptions="$JAVA_OPTS") && echo JVM Memory Configuration: $CALCULATED_MEMORY && JAVA_OPTS="$JAVA_OPTS $CALCULATED_MEMORY" && MALLOC_ARENA_MAX=2 SERVER_PORT=$PORT eval exec

$PWD/.java-buildpack/open_jdk_jre/bin/java $JAVA_OPTS -cp $PWD/. org.springframework.boot.loader.JarLauncher

状態 開始日時 cpu メモリー ディスク 詳細

#0 実行 2019-03-14T11:40:38Z 0.0% 768M の中の 188.1M 1G の中の 164.8M

Open https://attendees.$(terraform output apps_domain) in a browser.

You can SSH into the container with cf ssh.

cf ssh attendees

For the rest, continue with the labs at

https://github.com/Pivotal-Field-Engineering/pcf-ers-demo/tree/master/Labs

SSH to OpsManager

Create a script for logging in to the OpsManager VM over SSH.

export OM_TARGET=$(terraform output ops_manager_dns)

OPS_MANAGER_SSH_PRIVATE_KEY=$(terraform output ops_manager_ssh_private_key)

cat <<EOF > ssh-opsman.sh

#!/bin/bash

cat << KEY > opsman.pem

${OPS_MANAGER_SSH_PRIVATE_KEY}

KEY

chmod 600 opsman.pem

ssh -i opsman.pem -o "StrictHostKeyChecking=no" -l ubuntu ${OM_TARGET}

EOF

chmod +x ssh-opsman.sh

Run ssh-opsman.sh and try logging in to the OpsManager VM.

./ssh-opsman.sh

Output

Warning: Permanently added 'pcf.pas.bosh.tokyo,13.230.80.142' (ECDSA) to the list of known hosts.

Unauthorized use is strictly prohibited. All access and activity

is subject to logging and monitoring.

Welcome to Ubuntu 16.04.5 LTS (GNU/Linux 4.15.0-45-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

Last login: Thu Mar 14 11:44:46 UTC 2019 from 220.240.200.125 on pts/0

Last login: Thu Mar 14 11:46:50 2019 from 220.240.200.125

ubuntu@ip-10-0-0-143:~$

Log out.

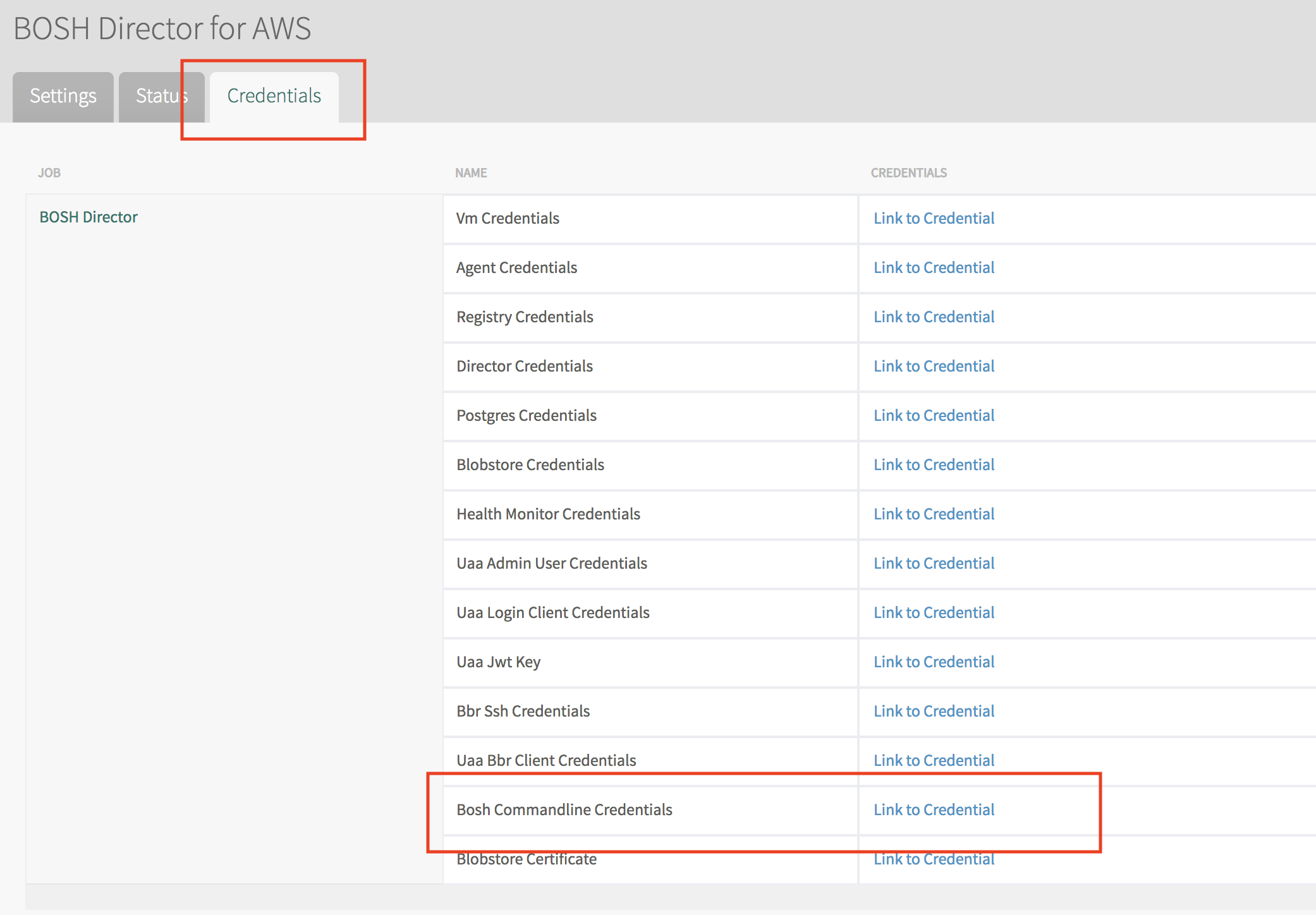

Configuring the BOSH CLI

Set things up so that the bosh CLI can be used inside the OpsManager VM.

Setting the following four environment variables lets the bosh CLI access the deployed BOSH Director:

- BOSH_ENVIRONMENT

- BOSH_CLIENT

- BOSH_CLIENT_SECRET

- BOSH_CA_CERT

Here they are set from the CLI on purpose.

export OM_TARGET=$(terraform output ops_manager_dns)

OPS_MANAGER_SSH_PRIVATE_KEY=$(terraform output ops_manager_ssh_private_key)

cat <<EOF > opsman.pem

${OPS_MANAGER_SSH_PRIVATE_KEY}

EOF

chmod 600 opsman.pem

BOSH_CLI=$(om curl -s -p "/api/v0/deployed/director/credentials/bosh_commandline_credentials" | jq -r '.credential')

ssh -q -i opsman.pem \
  -o "StrictHostKeyChecking=no" \
  ubuntu@${OM_TARGET} "echo $BOSH_CLI | sed 's/ /\n/g' | sed 's/^/export /g' | sed '/bosh/d' | sudo tee /etc/profile.d/bosh.sh" > /dev/null
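The sed pipeline in the ssh command above is easier to follow when run locally on a dummy value. Judging from the sed '/bosh/d' step, the credential string is a single line of KEY=value pairs followed by the word bosh. The sketch below applies the same three steps (split on spaces, prefix each line with export, drop the bosh line). The secret and the certificate path are placeholders, to_exports is a name made up for this memo, and tr is used instead of sed 's/ /\n/g' because not every sed treats \n in the replacement as a newline.

```shell
#!/usr/bin/env bash
# to_exports reads a "KEY=value KEY=value ... bosh" line from stdin and
# prints one "export KEY=value" line per pair, dropping the trailing "bosh" word.
# Hypothetical helper that mirrors the pipeline used in the ssh command.
to_exports() {
  tr -s ' ' '\n' | sed -e '/^$/d' -e 's/^/export /' -e '/bosh/d'
}

# Dummy input: only BOSH_CLIENT and BOSH_ENVIRONMENT are values seen in this memo;
# the secret and the certificate path are placeholders.
echo "BOSH_CLIENT=ops_manager BOSH_CLIENT_SECRET=dummy-secret BOSH_CA_CERT=/path/to/root_ca_certificate BOSH_ENVIRONMENT=10.0.16.5 bosh " | to_exports
```

One caveat of this approach: any pair whose value happens to contain the lowercase string bosh would be dropped as well.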

Run ssh-opsman.sh to log in to the OpsManager VM, then run the bosh env command.

bosh env

Output

Name p-bosh

UUID 1af68b6f-6409-4b64-8add-d8ecf4dd167a

Version 268.2.2 (00000000)

CPI aws_cpi

Features compiled_package_cache: disabled

config_server: enabled

local_dns: enabled

power_dns: disabled

snapshots: disabled

User ops_manager

Succeeded

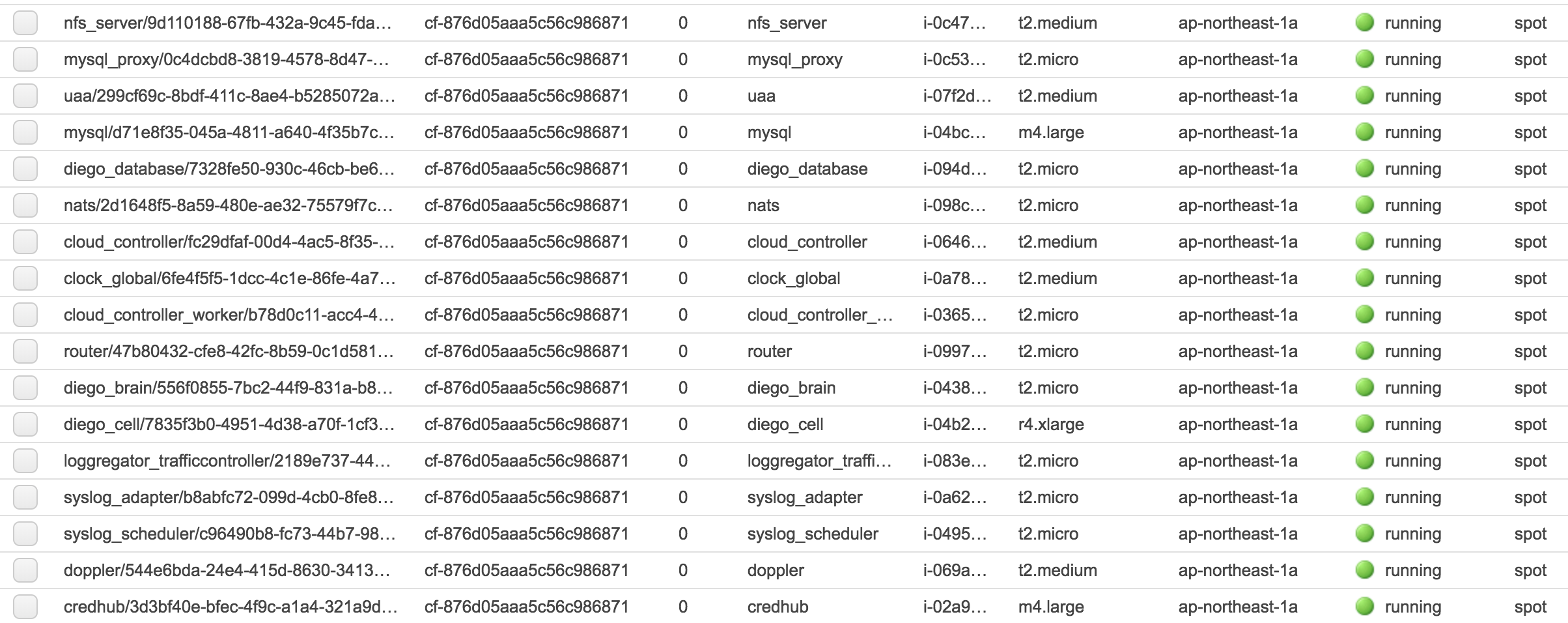

bosh vms lists the VMs managed by the BOSH Director.

bosh vms

Output

Using environment '10.0.16.5' as client 'ops_manager'

Task 126. Done

Deployment 'cf-876d05aaa5c56c986871'

Instance Process State AZ IPs VM CID VM Type Active

clock_global/6fe4f5f5-1dcc-4c1e-86fe-4a77fb63f89a running ap-northeast-1a 10.0.4.13 i-0a780d18ebbc9520e t2.medium true

cloud_controller/fc29dfaf-00d4-4ac5-8f35-8c55208d0416 running ap-northeast-1a 10.0.4.11 i-06467282d345d7c5d t2.medium true

cloud_controller_worker/b78d0c11-acc4-4828-a8e3-0a10eedbeaa5 running ap-northeast-1a 10.0.4.14 i-036538c1c0046dad1 t2.micro true

credhub/3d3bf40e-bfec-4f9c-a1a4-321a9dd13ee8 running ap-northeast-1a 10.0.4.21 i-02a9374d0f31079f5 m4.large true

diego_brain/556f0855-7bc2-44f9-831a-b8096bd7c13e running ap-northeast-1a 10.0.4.15 i-0438abe996043d7ca t2.micro true

diego_cell/7835f3b0-4951-4d38-a70f-1cf3d60fd9ab running ap-northeast-1a 10.0.4.16 i-04b26e7699040164d r4.xlarge true

diego_database/7328fe50-930c-46cb-be6d-31dbf2cf8dcd running ap-northeast-1a 10.0.4.9 i-094dd2963f13dae62 t2.micro true

doppler/544e6bda-24e4-415d-8630-341335e3c1f8 running ap-northeast-1a 10.0.4.20 i-069a417efd4d3a97f t2.medium true

loggregator_trafficcontroller/2189e737-448c-41fa-8bf5-46dd01d3b6f0 running ap-northeast-1a 10.0.4.17 i-083e4bbf57afa04cf t2.micro true

mysql/d71e8f35-045a-4811-a640-4f35b7c14d2b running ap-northeast-1a 10.0.4.8 i-04bcc60cda55e86bc m4.large true

mysql_proxy/0c4dcbd8-3819-4578-8d47-b7a053b29bd0 running ap-northeast-1a 10.0.4.7 i-0c538374e2bacf280 t2.micro true

nats/2d1648f5-8a59-480e-ae32-75579f7c8237 running ap-northeast-1a 10.0.4.5 i-098ceb07db8f6a93b t2.micro true

nfs_server/9d110188-67fb-432a-9c45-fda22d374942 running ap-northeast-1a 10.0.4.6 i-0c4776d6499946f8b t2.medium true

router/47b80432-cfe8-42fc-8b59-0c1d581273c0 running ap-northeast-1a 10.0.4.12 i-099792ad9b6beb9e0 t2.micro true

syslog_adapter/b8abfc72-099d-4cb0-8fe8-e1883aa5808a running ap-northeast-1a 10.0.4.18 i-0a622867c499ea01c t2.micro true

syslog_scheduler/c96490b8-fc73-44b7-983f-9aa73479f7bd running ap-northeast-1a 10.0.4.19 i-04952bbe815d75564 t2.micro true

uaa/299cf69c-8bdf-411c-8ae4-b5285072a47d running ap-northeast-1a 10.0.4.10 i-07f2d995f721a96f5 t2.medium true

17 vms

Succeeded

The bosh instances --ps command lists the processes.

bosh instances --ps

Output

Using environment '10.0.16.5' as client 'ops_manager'

Task 127. Done

Deployment 'cf-876d05aaa5c56c986871'

Instance Process Process State AZ IPs

clock_global/6fe4f5f5-1dcc-4c1e-86fe-4a77fb63f89a - running ap-northeast-1a 10.0.4.13

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ cc_deployment_updater running - -

~ cloud_controller_clock running - -

~ log-cache-scheduler running - -

~ loggregator_agent running - -

~ metric_registrar_orchestrator running - -

cloud_controller/fc29dfaf-00d4-4ac5-8f35-8c55208d0416 - running ap-northeast-1a 10.0.4.11

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ cloud_controller_ng running - -

~ cloud_controller_worker_local_1 running - -

~ cloud_controller_worker_local_2 running - -

~ loggregator_agent running - -

~ nginx_cc running - -

~ route_registrar running - -

~ routing-api running - -

~ statsd_injector running - -

cloud_controller_worker/b78d0c11-acc4-4828-a8e3-0a10eedbeaa5 - running ap-northeast-1a 10.0.4.14

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ cloud_controller_worker_1 running - -

~ loggregator_agent running - -

credhub/3d3bf40e-bfec-4f9c-a1a4-321a9dd13ee8 - running ap-northeast-1a 10.0.4.21

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ credhub running - -

~ loggregator_agent running - -

diego_brain/556f0855-7bc2-44f9-831a-b8096bd7c13e - running ap-northeast-1a 10.0.4.15

~ auctioneer running - -

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ cc_uploader running - -

~ file_server running - -

~ loggregator_agent running - -

~ service-discovery-controller running - -

~ ssh_proxy running - -

~ tps_watcher running - -

diego_cell/7835f3b0-4951-4d38-a70f-1cf3d60fd9ab - running ap-northeast-1a 10.0.4.16

~ bosh-dns running - -

~ bosh-dns-adapter running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ garden running - -

~ iptables-logger running - -

~ loggregator_agent running - -

~ netmon running - -

~ nfsv3driver running - -

~ rep running - -

~ route_emitter running - -

~ silk-daemon running - -

~ vxlan-policy-agent running - -

diego_database/7328fe50-930c-46cb-be6d-31dbf2cf8dcd - running ap-northeast-1a 10.0.4.9

~ bbs running - -

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ locket running - -

~ loggregator_agent running - -

~ policy-server running - -

~ policy-server-internal running - -

~ route_registrar running - -

~ silk-controller running - -

doppler/544e6bda-24e4-415d-8630-341335e3c1f8 - running ap-northeast-1a 10.0.4.20

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ doppler running - -

~ log-cache running - -

~ log-cache-cf-auth-proxy running - -

~ log-cache-expvar-forwarder running - -

~ log-cache-gateway running - -

~ log-cache-nozzle running - -

~ loggregator_agent running - -

~ metric_registrar_endpoint_worker running - -

~ metric_registrar_log_worker running - -

~ route_registrar running - -

loggregator_trafficcontroller/2189e737-448c-41fa-8bf5-46dd01d3b6f0 - running ap-northeast-1a 10.0.4.17

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ bosh-system-metrics-forwarder running - -

~ loggregator_agent running - -

~ loggregator_trafficcontroller running - -

~ reverse_log_proxy running - -

~ reverse_log_proxy_gateway running - -

~ route_registrar running - -

mysql/d71e8f35-045a-4811-a640-4f35b7c14d2b - running ap-northeast-1a 10.0.4.8

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ cluster-health-logger running - -

~ galera-agent running - -

~ galera-init running - -

~ gra-log-purger running - -

~ loggregator_agent running - -

~ mysql-diag-agent running - -

~ mysql-metrics running - -

mysql_proxy/0c4dcbd8-3819-4578-8d47-b7a053b29bd0 - running ap-northeast-1a 10.0.4.7

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ loggregator_agent running - -

~ proxy running - -

~ route_registrar running - -

nats/2d1648f5-8a59-480e-ae32-75579f7c8237 - running ap-northeast-1a 10.0.4.5

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ loggregator_agent running - -

~ nats running - -

nfs_server/9d110188-67fb-432a-9c45-fda22d374942 - running ap-northeast-1a 10.0.4.6

~ blobstore_nginx running - -

~ blobstore_url_signer running - -

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ loggregator_agent running - -

~ route_registrar running - -

router/47b80432-cfe8-42fc-8b59-0c1d581273c0 - running ap-northeast-1a 10.0.4.12

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ gorouter running - -

~ loggregator_agent running - -

syslog_adapter/b8abfc72-099d-4cb0-8fe8-e1883aa5808a - running ap-northeast-1a 10.0.4.18

~ adapter running - -

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ loggregator_agent running - -

syslog_scheduler/c96490b8-fc73-44b7-983f-9aa73479f7bd - running ap-northeast-1a 10.0.4.19

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ loggregator_agent running - -

~ scheduler running - -

uaa/299cf69c-8bdf-411c-8ae4-b5285072a47d - running ap-northeast-1a 10.0.4.10

~ bosh-dns running - -

~ bosh-dns-healthcheck running - -

~ bosh-dns-resolvconf running - -

~ loggregator_agent running - -

~ route_registrar running - -

~ statsd_injector running - -

~ uaa running - -

17 instances

Succeeded

Pausing PAS

bosh -d <deployment_name> stop stops all processes under deployment_name (cf-********* for PAS). In this case the VMs keep running. With the --hard option the VMs are deleted; the persistent disks remain, however, so the deployment can be restored.
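The PAS deployment name carries a generated suffix, so it has to be looked up first. A rough sketch for doing that inside the OpsManager VM, assuming the bosh CLI's --column option is available to restrict the table to the name column; pick_cf_deployment is a name made up for this memo.

```shell
#!/usr/bin/env bash
# pick_cf_deployment reads deployment names from stdin (one per line)
# and prints those that look like a PAS deployment (cf-<hex suffix>).
# Hypothetical helper for this memo.
pick_cf_deployment() {
  grep -oE '^cf-[0-9a-f]+'
}

# Usage (inside the OpsManager VM, with the BOSH_* variables set):
# CF_DEPLOYMENT=$(bosh deployments --column=name | pick_cf_deployment)
# bosh -d "${CF_DEPLOYMENT}" stop --hard -n
```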

If the MySQL instance count has been set to 3, be sure to set it back to 1 before running bosh stop.

bosh -d cf-*************** stop --hard -n

Output

Using environment '10.0.16.5' as client 'ops_manager'

Using deployment 'cf-876d05aaa5c56c986871'

Task 128

Task 128 | 11:57:36 | Preparing deployment: Preparing deployment (00:00:03)

Task 128 | 11:58:11 | Preparing package compilation: Finding packages to compile (00:00:01)

Task 128 | 11:58:12 | Updating instance nats: nats/2d1648f5-8a59-480e-ae32-75579f7c8237 (0) (canary) (00:00:42)

Task 128 | 11:58:54 | Updating instance nfs_server: nfs_server/9d110188-67fb-432a-9c45-fda22d374942 (0) (canary) (00:00:55)

Task 128 | 11:59:49 | Updating instance mysql_proxy: mysql_proxy/0c4dcbd8-3819-4578-8d47-b7a053b29bd0 (0) (canary)

Task 128 | 11:59:49 | Updating instance mysql: mysql/d71e8f35-045a-4811-a640-4f35b7c14d2b (0) (canary) (00:01:16)

Task 128 | 12:01:33 | Updating instance mysql_proxy: mysql_proxy/0c4dcbd8-3819-4578-8d47-b7a053b29bd0 (0) (canary) (00:01:44)

Task 128 | 12:01:33 | Updating instance diego_database: diego_database/7328fe50-930c-46cb-be6d-31dbf2cf8dcd (0) (canary) (00:01:43)

Task 128 | 12:03:16 | Updating instance uaa: uaa/299cf69c-8bdf-411c-8ae4-b5285072a47d (0) (canary) (00:00:53)

Task 128 | 12:04:09 | Updating instance cloud_controller: cloud_controller/fc29dfaf-00d4-4ac5-8f35-8c55208d0416 (0) (canary) (00:01:58)

Task 128 | 12:06:07 | Updating instance router: router/47b80432-cfe8-42fc-8b59-0c1d581273c0 (0) (canary) (00:01:00)

Task 128 | 12:07:07 | Updating instance cloud_controller_worker: cloud_controller_worker/b78d0c11-acc4-4828-a8e3-0a10eedbeaa5 (0) (canary)

Task 128 | 12:07:07 | Updating instance diego_brain: diego_brain/556f0855-7bc2-44f9-831a-b8096bd7c13e (0) (canary)

Task 128 | 12:07:07 | Updating instance doppler: doppler/544e6bda-24e4-415d-8630-341335e3c1f8 (0) (canary)

Task 128 | 12:07:07 | Updating instance diego_cell: diego_cell/7835f3b0-4951-4d38-a70f-1cf3d60fd9ab (0) (canary)

Task 128 | 12:07:07 | Updating instance loggregator_trafficcontroller: loggregator_trafficcontroller/2189e737-448c-41fa-8bf5-46dd01d3b6f0 (0) (canary)

Task 128 | 12:07:07 | Updating instance syslog_adapter: syslog_adapter/b8abfc72-099d-4cb0-8fe8-e1883aa5808a (0) (canary)

Task 128 | 12:07:07 | Updating instance clock_global: clock_global/6fe4f5f5-1dcc-4c1e-86fe-4a77fb63f89a (0) (canary)

Task 128 | 12:07:07 | Updating instance syslog_scheduler: syslog_scheduler/c96490b8-fc73-44b7-983f-9aa73479f7bd (0) (canary)

Task 128 | 12:07:07 | Updating instance credhub: credhub/3d3bf40e-bfec-4f9c-a1a4-321a9dd13ee8 (0) (canary) (00:00:54)

Task 128 | 12:08:10 | Updating instance doppler: doppler/544e6bda-24e4-415d-8630-341335e3c1f8 (0) (canary) (00:01:03)

Task 128 | 12:08:33 | Updating instance syslog_scheduler: syslog_scheduler/c96490b8-fc73-44b7-983f-9aa73479f7bd (0) (canary) (00:01:26)

Task 128 | 12:08:42 | Updating instance diego_brain: diego_brain/556f0855-7bc2-44f9-831a-b8096bd7c13e (0) (canary) (00:01:35)

Task 128 | 12:08:59 | Updating instance syslog_adapter: syslog_adapter/b8abfc72-099d-4cb0-8fe8-e1883aa5808a (0) (canary) (00:01:52)

Task 128 | 12:09:11 | Updating instance clock_global: clock_global/6fe4f5f5-1dcc-4c1e-86fe-4a77fb63f89a (0) (canary) (00:02:04)

Task 128 | 12:09:33 | Updating instance loggregator_trafficcontroller: loggregator_trafficcontroller/2189e737-448c-41fa-8bf5-46dd01d3b6f0 (0) (canary) (00:02:26)

Task 128 | 12:09:42 | Updating instance cloud_controller_worker: cloud_controller_worker/b78d0c11-acc4-4828-a8e3-0a10eedbeaa5 (0) (canary) (00:02:35)

Task 128 | 12:18:54 | Updating instance diego_cell: diego_cell/7835f3b0-4951-4d38-a70f-1cf3d60fd9ab (0) (canary) (00:11:47)

Task 128 Started Thu Mar 14 11:57:36 UTC 2019

Task 128 Finished Thu Mar 14 12:18:54 UTC 2019

Task 128 Duration 00:21:18

Task 128 done

Succeeded
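In the log above, diego_cell alone accounts for 00:11:47 of the 00:21:18 total. To see which steps dominate a task, the finished "Updating instance" lines can be ranked by duration. This is a rough sketch under the assumption that the log format matches the output above; `slowest_steps` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Reads a BOSH task log on stdin and prints "<duration> <instance group>"
# for every finished "Updating instance" step, longest first.
# Lines without a trailing (HH:MM:SS) are step-start lines and are skipped.
slowest_steps() {
  sed -n 's/.*| Updating instance \([^:]*\): .*(\([0-9][0-9]:[0-9][0-9]:[0-9][0-9]\))$/\2 \1/p' \
    | sort -r
}

# Usage: save the task output to a file, then
#   slowest_steps < stop.log | head -n 3
```

Because the durations are fixed-width HH:MM:SS strings, a plain reverse sort orders them correctly.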

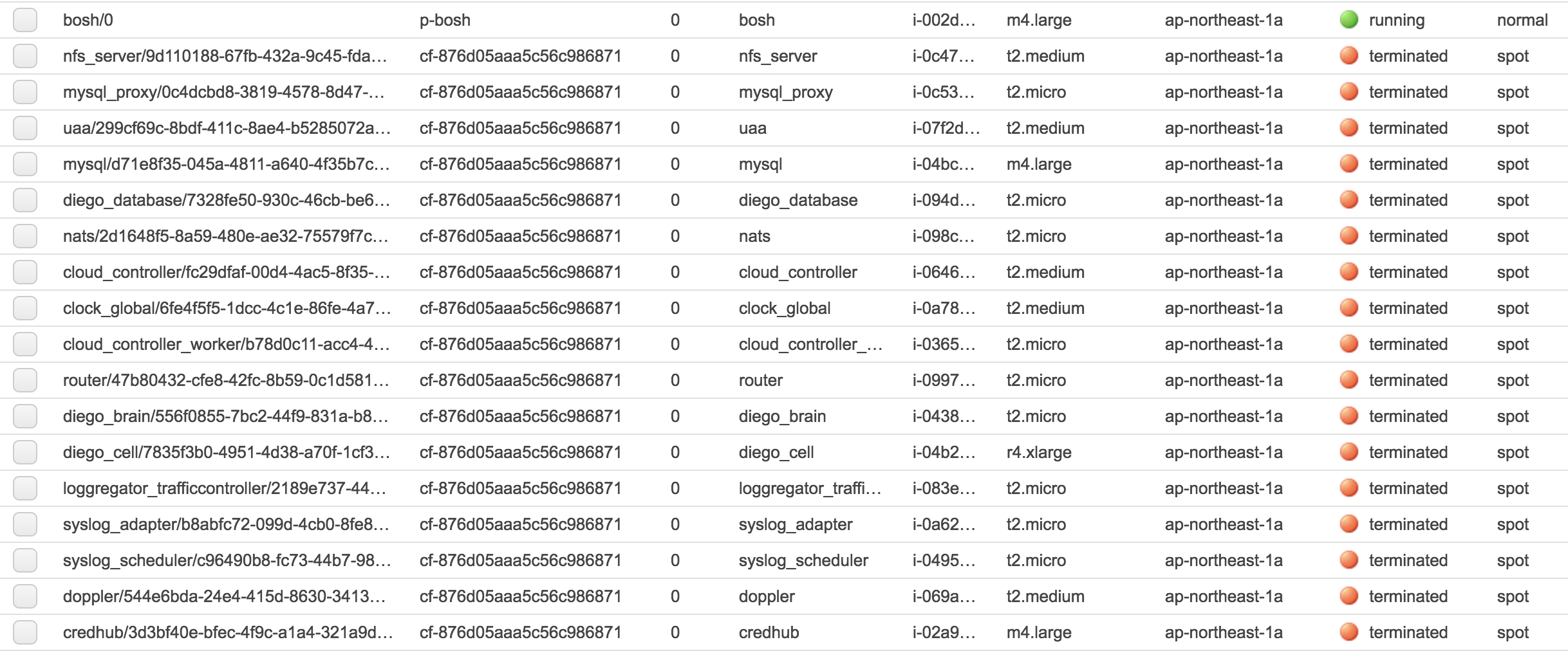

In the EC2 console, you can confirm that the PAS instances have been deleted.

Resuming PAS

bosh -d cf-*************** start -n

Output

Using environment '10.0.16.5' as client 'ops_manager'

Using deployment 'cf-876d05aaa5c56c986871'

Task 134

Task 134 | 12:25:57 | Preparing deployment: Preparing deployment (00:00:03)

Task 134 | 12:26:33 | Preparing package compilation: Finding packages to compile (00:00:00)

Task 134 | 12:26:33 | Creating missing vms: nats/2d1648f5-8a59-480e-ae32-75579f7c8237 (0)

Task 134 | 12:26:33 | Creating missing vms: mysql_proxy/0c4dcbd8-3819-4578-8d47-b7a053b29bd0 (0)

Task 134 | 12:26:33 | Creating missing vms: nfs_server/9d110188-67fb-432a-9c45-fda22d374942 (0)

Task 134 | 12:26:33 | Creating missing vms: mysql/d71e8f35-045a-4811-a640-4f35b7c14d2b (0)

Task 134 | 12:26:33 | Creating missing vms: diego_database/7328fe50-930c-46cb-be6d-31dbf2cf8dcd (0)

Task 134 | 12:26:33 | Creating missing vms: uaa/299cf69c-8bdf-411c-8ae4-b5285072a47d (0)

Task 134 | 12:27:53 | Creating missing vms: nats/2d1648f5-8a59-480e-ae32-75579f7c8237 (0) (00:01:20)

Task 134 | 12:27:53 | Creating missing vms: cloud_controller/fc29dfaf-00d4-4ac5-8f35-8c55208d0416 (0)

Task 134 | 12:27:55 | Creating missing vms: mysql_proxy/0c4dcbd8-3819-4578-8d47-b7a053b29bd0 (0) (00:01:22)

Task 134 | 12:27:55 | Creating missing vms: router/47b80432-cfe8-42fc-8b59-0c1d581273c0 (0)

Task 134 | 12:27:55 | Creating missing vms: uaa/299cf69c-8bdf-411c-8ae4-b5285072a47d (0) (00:01:22)

Task 134 | 12:27:55 | Creating missing vms: clock_global/6fe4f5f5-1dcc-4c1e-86fe-4a77fb63f89a (0)

Task 134 | 12:27:57 | Creating missing vms: diego_database/7328fe50-930c-46cb-be6d-31dbf2cf8dcd (0) (00:01:24)

Task 134 | 12:27:57 | Creating missing vms: cloud_controller_worker/b78d0c11-acc4-4828-a8e3-0a10eedbeaa5 (0)

Task 134 | 12:28:07 | Creating missing vms: mysql/d71e8f35-045a-4811-a640-4f35b7c14d2b (0) (00:01:34)

Task 134 | 12:28:07 | Creating missing vms: diego_brain/556f0855-7bc2-44f9-831a-b8096bd7c13e (0)

Task 134 | 12:28:21 | Creating missing vms: nfs_server/9d110188-67fb-432a-9c45-fda22d374942 (0) (00:01:48)

Task 134 | 12:28:21 | Creating missing vms: diego_cell/7835f3b0-4951-4d38-a70f-1cf3d60fd9ab (0)

Task 134 | 12:29:06 | Creating missing vms: cloud_controller/fc29dfaf-00d4-4ac5-8f35-8c55208d0416 (0) (00:01:13)

Task 134 | 12:29:06 | Creating missing vms: loggregator_trafficcontroller/2189e737-448c-41fa-8bf5-46dd01d3b6f0 (0)

Task 134 | 12:29:08 | Creating missing vms: cloud_controller_worker/b78d0c11-acc4-4828-a8e3-0a10eedbeaa5 (0) (00:01:11)

Task 134 | 12:29:08 | Creating missing vms: syslog_adapter/b8abfc72-099d-4cb0-8fe8-e1883aa5808a (0)

Task 134 | 12:29:09 | Creating missing vms: router/47b80432-cfe8-42fc-8b59-0c1d581273c0 (0) (00:01:14)

Task 134 | 12:29:09 | Creating missing vms: syslog_scheduler/c96490b8-fc73-44b7-983f-9aa73479f7bd (0)

Task 134 | 12:29:15 | Creating missing vms: clock_global/6fe4f5f5-1dcc-4c1e-86fe-4a77fb63f89a (0) (00:01:20)

Task 134 | 12:29:15 | Creating missing vms: doppler/544e6bda-24e4-415d-8630-341335e3c1f8 (0)

Task 134 | 12:29:16 | Creating missing vms: diego_brain/556f0855-7bc2-44f9-831a-b8096bd7c13e (0) (00:01:09)

Task 134 | 12:29:16 | Creating missing vms: credhub/3d3bf40e-bfec-4f9c-a1a4-321a9dd13ee8 (0)

Task 134 | 12:29:22 | Creating missing vms: diego_cell/7835f3b0-4951-4d38-a70f-1cf3d60fd9ab (0) (00:01:01)

Task 134 | 12:30:15 | Creating missing vms: loggregator_trafficcontroller/2189e737-448c-41fa-8bf5-46dd01d3b6f0 (0) (00:01:09)

Task 134 | 12:30:20 | Creating missing vms: syslog_adapter/b8abfc72-099d-4cb0-8fe8-e1883aa5808a (0) (00:01:12)

Task 134 | 12:30:21 | Creating missing vms: syslog_scheduler/c96490b8-fc73-44b7-983f-9aa73479f7bd (0) (00:01:12)

Task 134 | 12:30:22 | Creating missing vms: credhub/3d3bf40e-bfec-4f9c-a1a4-321a9dd13ee8 (0) (00:01:06)

Task 134 | 12:30:34 | Creating missing vms: doppler/544e6bda-24e4-415d-8630-341335e3c1f8 (0) (00:01:19)

Task 134 | 12:30:34 | Updating instance nats: nats/2d1648f5-8a59-480e-ae32-75579f7c8237 (0) (canary) (00:00:40)

Task 134 | 12:31:14 | Updating instance nfs_server: nfs_server/9d110188-67fb-432a-9c45-fda22d374942 (0) (canary) (00:00:44)

Task 134 | 12:31:58 | Updating instance mysql_proxy: mysql_proxy/0c4dcbd8-3819-4578-8d47-b7a053b29bd0 (0) (canary)

Task 134 | 12:31:58 | Updating instance mysql: mysql/d71e8f35-045a-4811-a640-4f35b7c14d2b (0) (canary)

Task 134 | 12:32:38 | Updating instance mysql_proxy: mysql_proxy/0c4dcbd8-3819-4578-8d47-b7a053b29bd0 (0) (canary) (00:00:40)